Problem Statement :

It is possible to copy data from Azure Analysis services from Azure Data Factory (ADF) as stated in the blog : Copy Data from SSAS/AAS through Azure Data Factory.

But this requires us to create a Linked Server via a SQL server and unfortunately in case if MFA is enabled on an Account which is being leveraged for creation of Linked Server to AAS ; one cannot create a linked server for automated jobs (and would only be able to create on an interactive basis)

Is it possible to Copy / Extract data from Azure Analysis service with MFA enabled on AD accounts in an automated way .

Prerequisites :

- MSFT Fabric Dataflow Gen 2

- AAS Tabular Model

Solution :

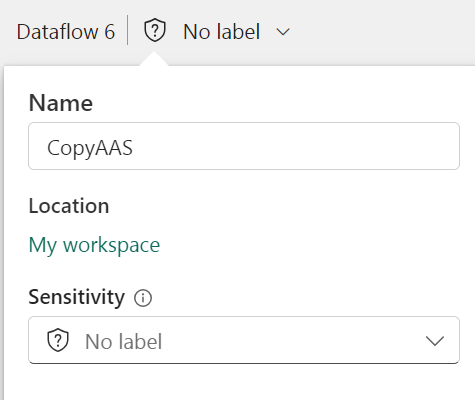

- Login to https://app.fabric.microsoft.com/home?experience=data-factory and Select Dataflow Gen 2.

And rename the Dataflow :

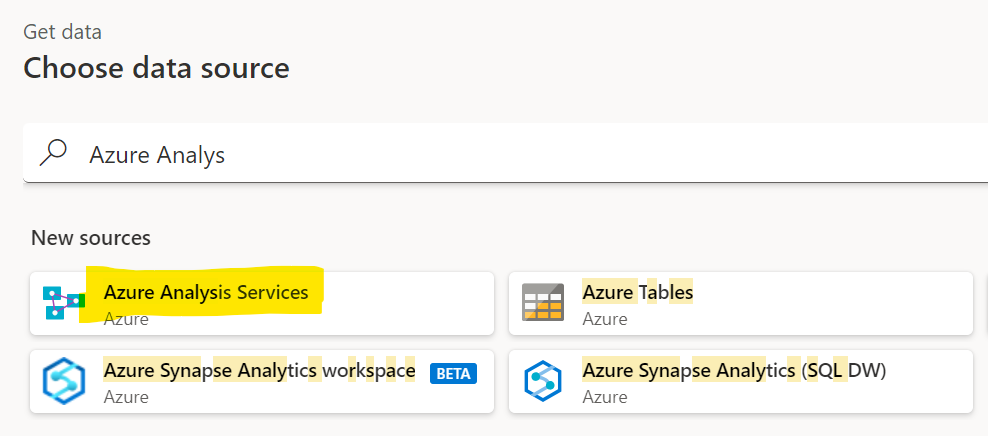

2. Search for Azure Analysis Services components and Select it

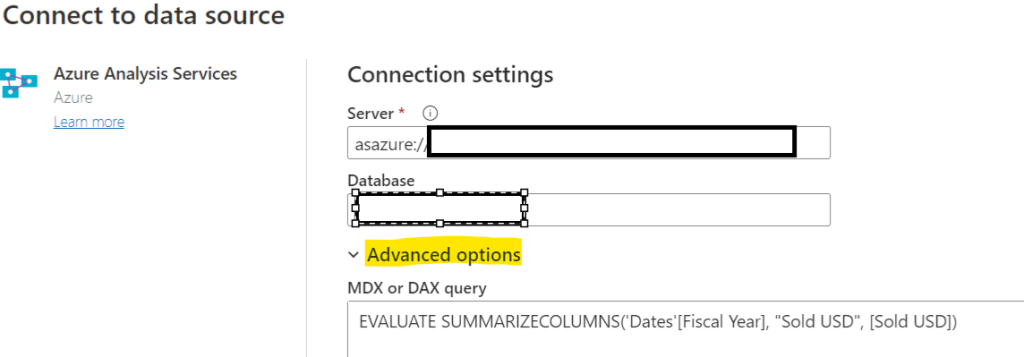

3. Provide the Server and the Database details and under Advanced Options : input the DAX query ( the output which you need to copy from AAS )

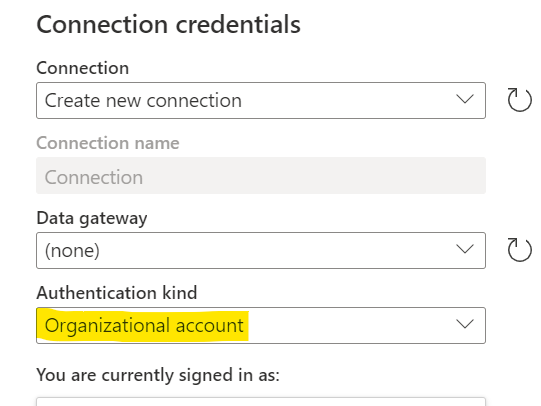

Then create a connection to the AAS via the Organization account and sign in and Click ‘Next’

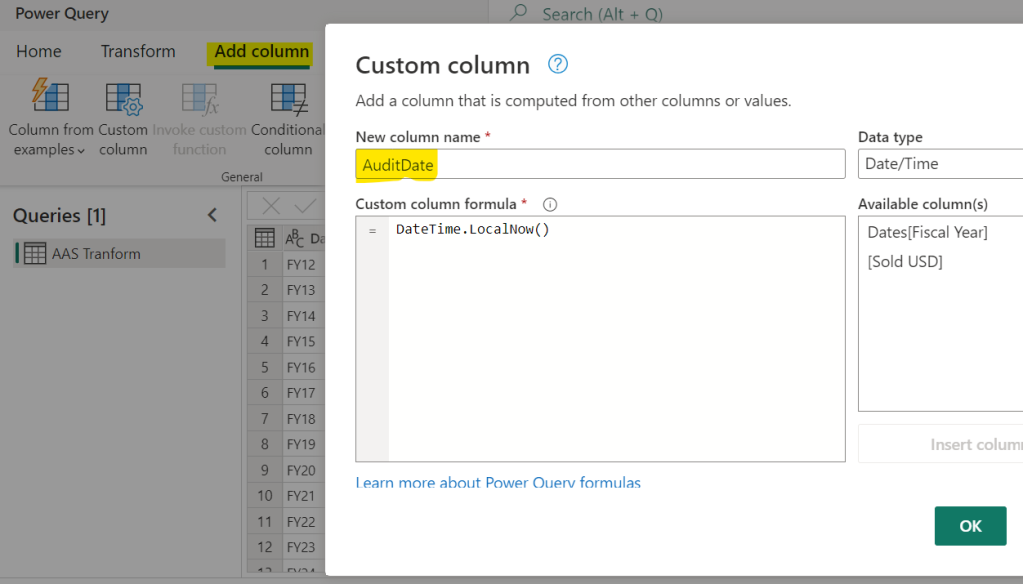

4. Do the necessary transformation that you need in the Power Query mode.

In this scenario, we would add a new column called AuditDate for auditing purpose.

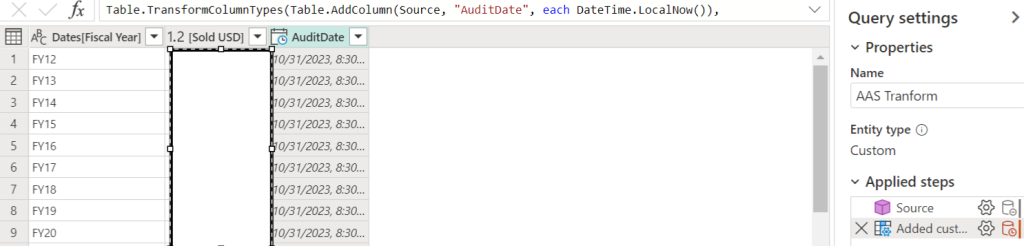

Final Source transformations state :

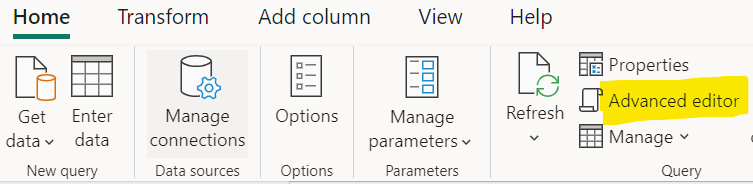

The Advanced editor code :

| let Source = AnalysisServices.Database(“<<ServerName>>”, “<<DatabaseName>>”, [Query = “EVALUATE SUMMARIZECOLUMNS(‘Dates'[Fiscal Year], “”Sold USD””, [Sold USD])”]), #”Added custom” = Table.TransformColumnTypes(Table.AddColumn(Source, “AuditDate”, each DateTime.LocalNow()), {{“AuditDate”, type datetime}}) in #”Added custom” |

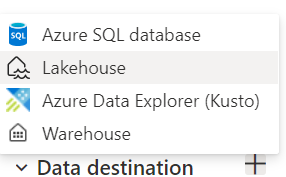

5. Now Select the Sink / Destination settings

As of today only 4 Sinks are supported :

So for our use case, we would Select Azure SQL Database.

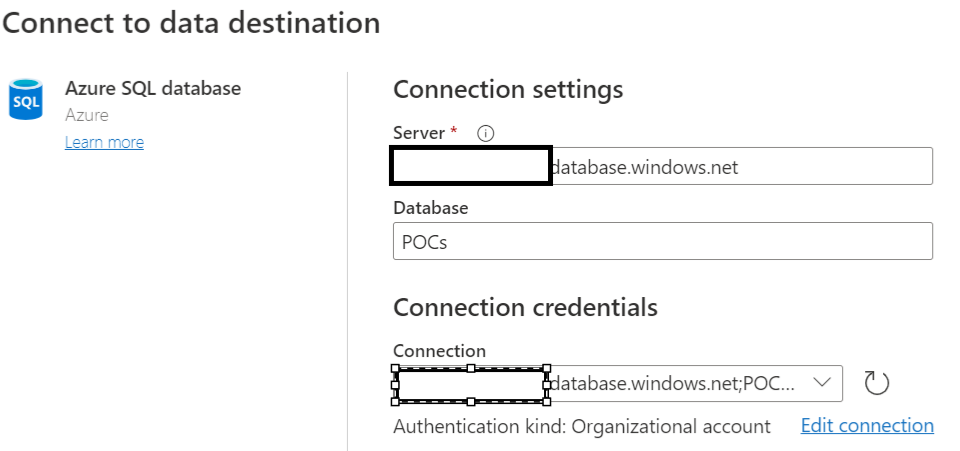

6. Similar to #3, create a connection to Azure SQL Database

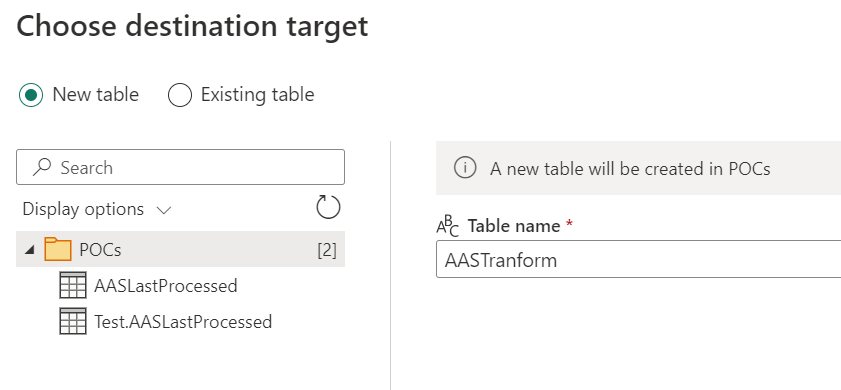

7. You can either create a new table on run or map it to an existing one.

In our use case, we would create a new table in destination with the name AASTransform.

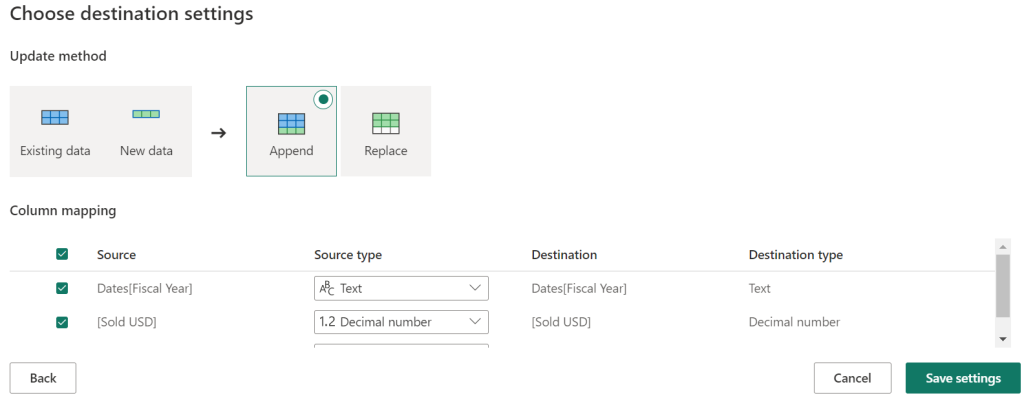

8. One can either append the data or replace the data based on the settings

We would proceed with Append data scenario.

And Click on “Save settings”.

9. Finally Click on “Publish”

10. The Dataflow begins the 1st execution once it’s published.

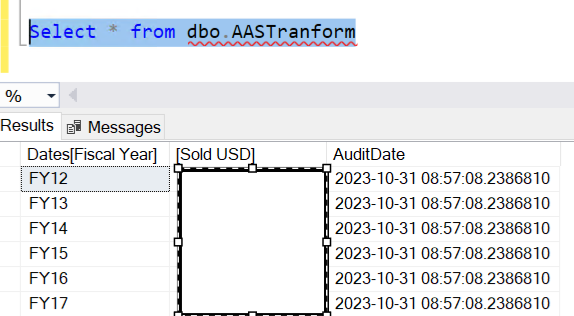

Database output :

11. To Execute again, Click on the Refresh now component of the dataflow.

As we have enabled Append data at sink, we would have 2 distinct AuditDates

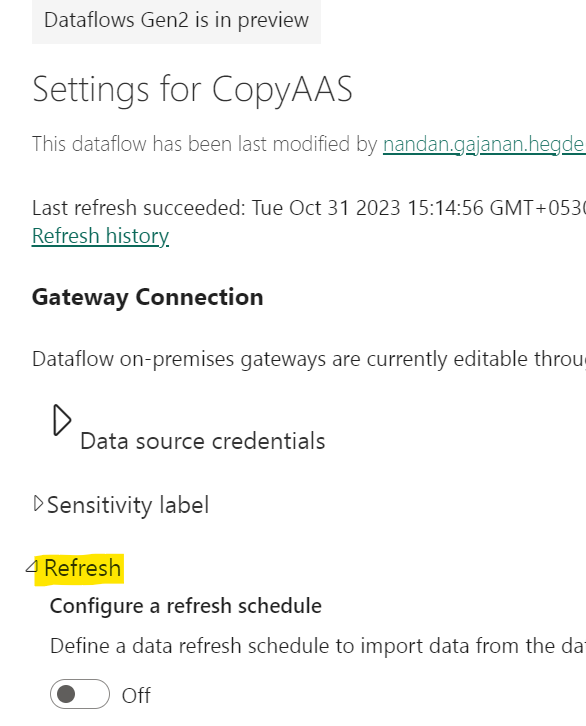

12. In order to schedule the data extraction, one can either schedule the dataflow refresh frequency via Dataflow Settings:

OR schedule via Fabric Data pipelines.