Problem Statement :

As of 21st Sep, 2023, pipelines within Synapse / Data Factory do not have a timeout setting, so there is no out-of-the-box way to auto-cancel, alert on or notify about long-running pipelines within Synapse / ADF.

Is it possible to auto-cancel long-running pipelines within Synapse / Azure Data Factory?

Prerequisites :

- Azure Data Factory / Synapse

Solution :

To achieve this, we have to build our own custom logic, as shown below:

GitHub Code

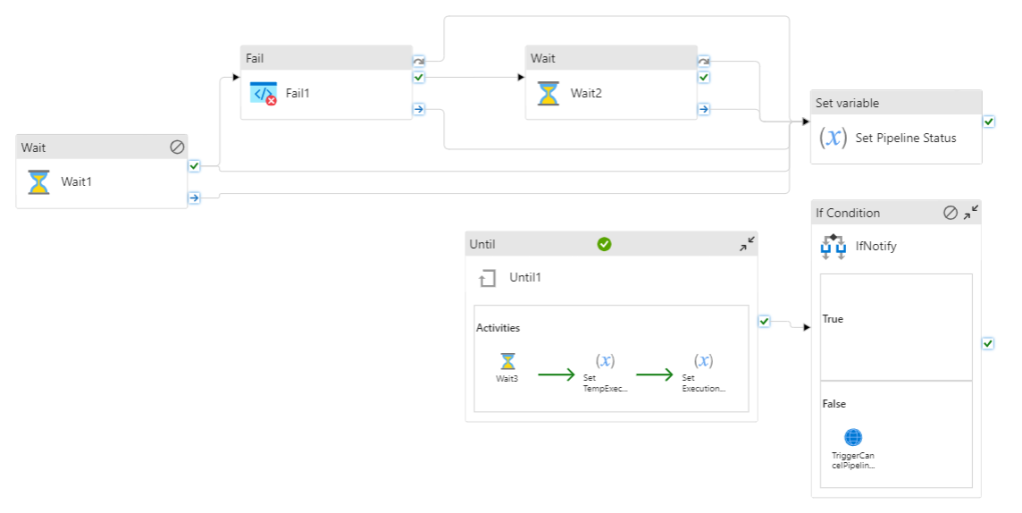

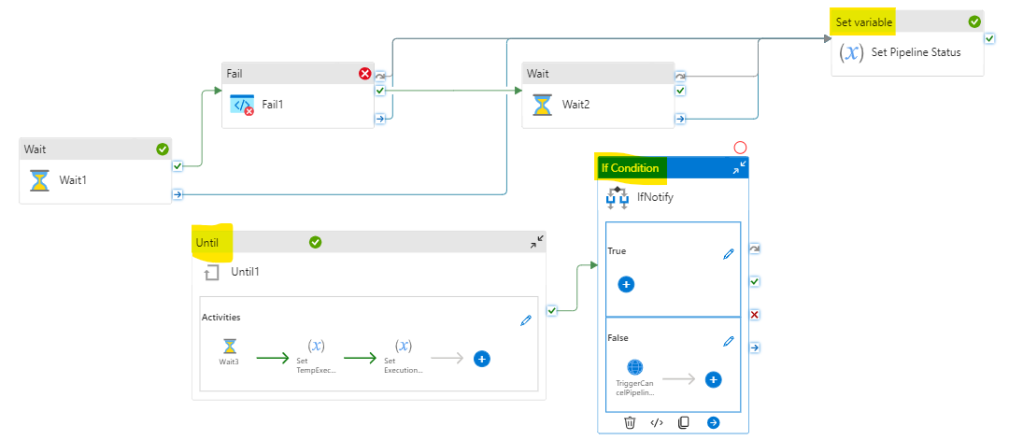

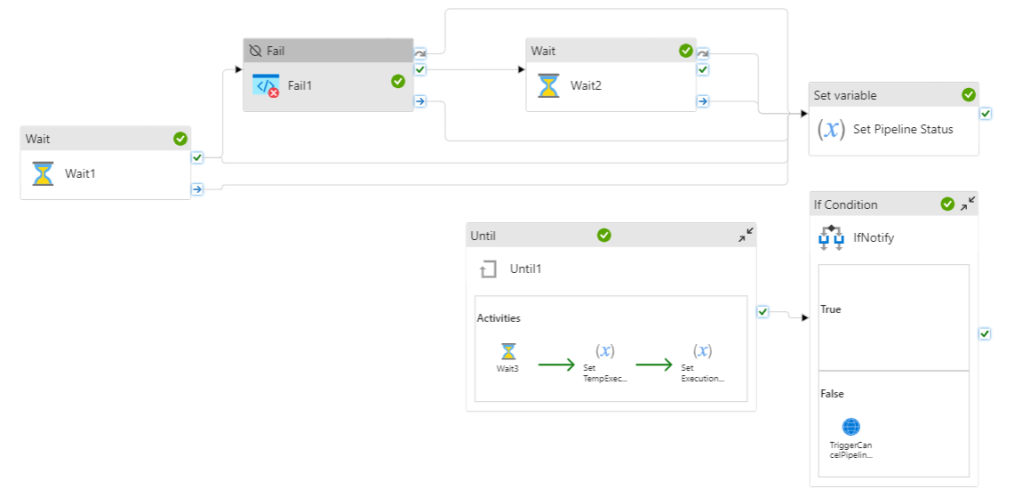

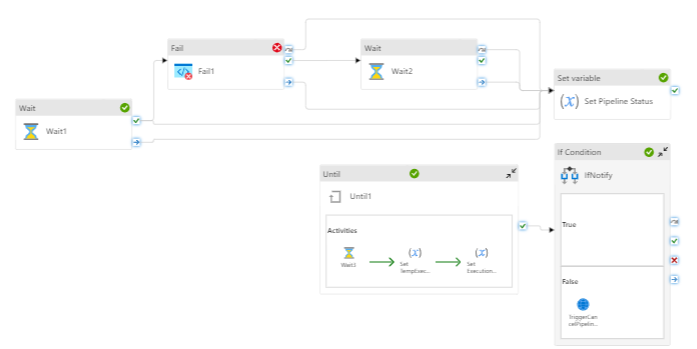

where the activities highlighted in yellow are the additional custom logic that needs to be added to the existing pipeline.

Pipeline Parameters :

where :

a) TimeOutInSec : The timeout value of the pipeline, in seconds

b) QueueTimeInSec : The interval, in seconds, at which the pipeline's execution time / status is checked

c) SubscriptionID : The ID of the subscription hosting the Azure Data Factory / Synapse workspace

d) ResourceGroupName : The name of the resource group hosting the Azure Data Factory / Synapse workspace

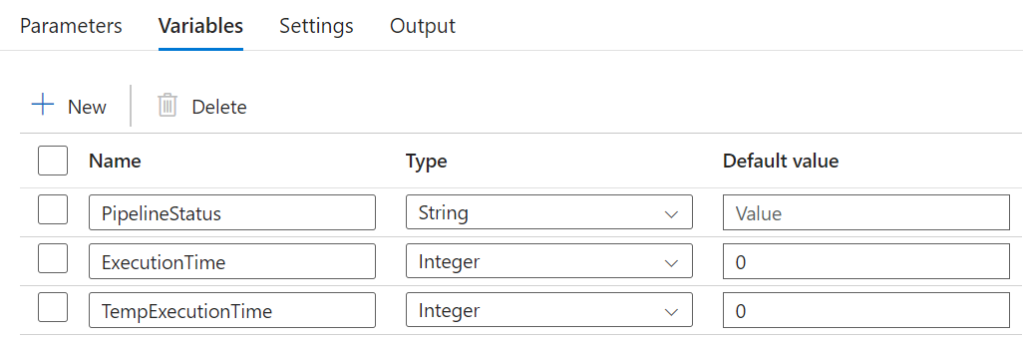
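Because the status is only checked every QueueTimeInSec seconds, the pipeline is cancelled once the accumulated check interval reaches TimeOutInSec, so the effective timeout is TimeOutInSec rounded up to a multiple of QueueTimeInSec. The Python sketch below shows that arithmetic; `effective_timeout` is a hypothetical helper, not part of ADF, and it assumes the accumulated time starts at 0 and that the monitoring loop always performs at least one check. It counts only the wait time, so the real elapsed time will be somewhat longer because of the overhead of the activities themselves.

```python
from typing import Tuple


def effective_timeout(timeout_in_sec: int, queue_time_in_sec: int) -> Tuple[int, int]:
    """Return (number of checks, counted seconds) before the timeout branch fires.

    Hypothetical helper: models only the wait intervals, not activity overhead.
    """
    if queue_time_in_sec <= 0:
        raise ValueError("QueueTimeInSec must be positive")
    # Ceiling division without floats: the accumulated time grows by
    # QueueTimeInSec per check and must reach TimeOutInSec.
    checks = -(-timeout_in_sec // queue_time_in_sec)
    # The monitoring loop always performs at least one check.
    checks = max(1, checks)
    return checks, checks * queue_time_in_sec
```

For example, `effective_timeout(3600, 300)` gives `(12, 3600)`, while `effective_timeout(1000, 300)` gives `(4, 1200)`: with a 300-second interval, a 1000-second timeout effectively becomes 1200 seconds. A smaller QueueTimeInSec tightens the timeout at the cost of more loop iterations.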

Pipeline Variables :

In the above flow, the Wait1, Wait2 and Fail1 activities make up the normal pipeline flow.

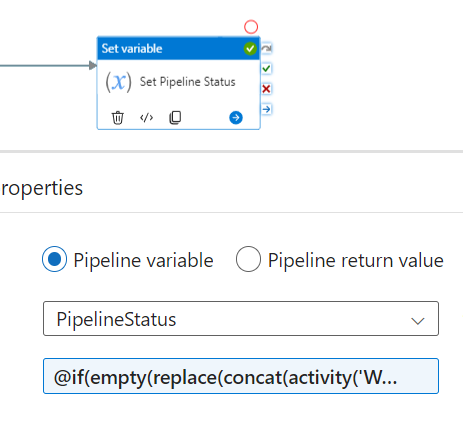

1. The ‘Set Pipeline Status’ Set Variable activity determines the status of the normal flow when no timeout occurs (whether the pipeline succeeded or failed).

Value :

@if(empty(replace(concat(activity('Wait1')?.error?.message,'^|',activity('Wait2')?.error?.message,'^|',activity('Fail1')?.error?.message),'^|','')),'Success','Failure')

The above value, and how the activities are integrated in a sequential flow, are explained in the blog below :

Error Logging and the Art of Avoiding Redundant Activities in Azure Data Factory
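The logic of the ‘Set Pipeline Status’ expression can be emulated in plain Python: the error messages (null when an activity did not fail) are concatenated with a `^|` separator, the separators are stripped, and only a non-empty remainder signals a failure. This is a local sketch, not ADF code; `pipeline_status` is a hypothetical helper, and treating a null message as an empty string is an assumption about how the null-safe `?.` results behave inside concat.

```python
from typing import Optional

SEPARATOR = "^|"


def pipeline_status(*error_messages: Optional[str]) -> str:
    """Mimic @if(empty(replace(concat(...),'^|','')),'Success','Failure')."""
    # Assumption: a missing error message (activity did not fail) acts like "".
    combined = SEPARATOR.join(msg or "" for msg in error_messages)
    # Strip the separators; anything left over is real error text.
    return "Success" if combined.replace(SEPARATOR, "") == "" else "Failure"
```

Calling `pipeline_status(None, None, None)` returns `"Success"`, while `pipeline_status(None, None, "Fail1 error")` returns `"Failure"`. The separator is only there so the concatenation stays readable; it is removed before the emptiness check.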

2. In parallel with the actual flow, we use an Until activity and an If Condition activity to check whether the pipeline has exceeded the allotted timeout and to take the necessary action.

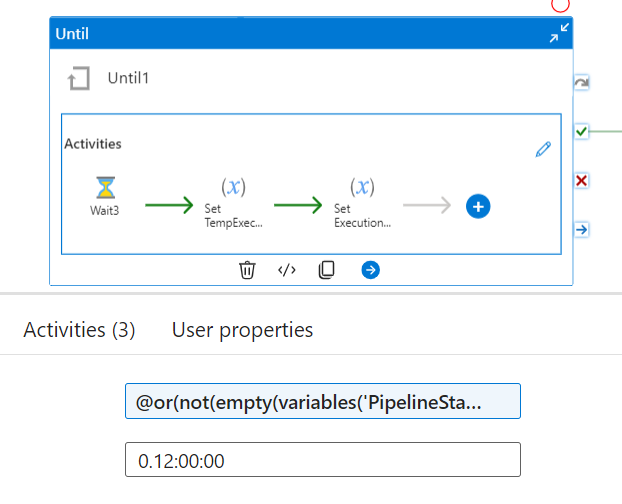

a) Until Activity :

Expression :

@or(not(empty(variables('PipelineStatus'))),greaterOrEquals(variables('ExecutionTime'),pipeline().parameters.TimeoutInSec))

where the Until activity keeps iterating until either the main flow within the pipeline has completed (success or failure) OR the pipeline is still in progress and has exceeded the timeout allocated for its execution.
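A minimal Python sketch of the same exit condition makes the two exit paths explicit; the function and argument names are hypothetical stand-ins for the PipelineStatus and ExecutionTime variables and the TimeoutInSec parameter.

```python
from typing import Optional


def until_should_exit(pipeline_status: Optional[str], execution_time: int, timeout_in_sec: int) -> bool:
    # not(empty(variables('PipelineStatus'))): the main flow has finished
    main_flow_done = bool(pipeline_status)
    # greaterOrEquals(variables('ExecutionTime'), pipeline().parameters.TimeoutInSec)
    timed_out = execution_time >= timeout_in_sec
    # @or(...): stop looping on whichever happens first
    return main_flow_done or timed_out
```

For instance, `until_should_exit("", 300, 600)` is `False` (still running, within the timeout), whereas `until_should_exit("Failure", 300, 600)` and `until_should_exit("", 600, 600)` are both `True`.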

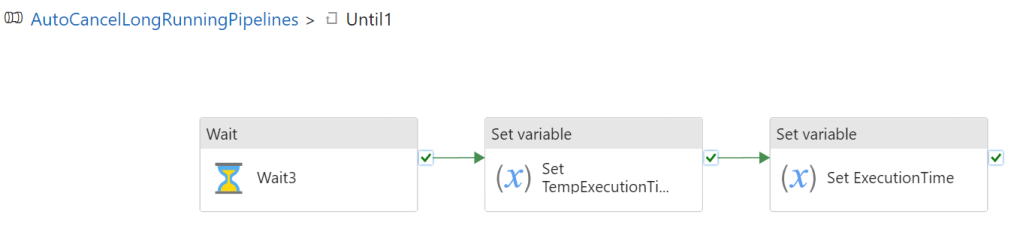

Within the Until activity :

i) The Wait3 activity waits for the QueueTimeInSec interval before the next iteration.

ii) The ‘Set TempExecutionTime’ Set Variable activity computes the overall execution time up to that point.

Value :

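@add(variables('ExecutionTime'),pipeline().parameters.QueueTimeInSec)

iii) The ‘Set ExecutionTime’ Set Variable activity overwrites the ExecutionTime variable with that value. The temporary variable is needed because a Set Variable activity cannot reference the variable it is setting.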

Value :

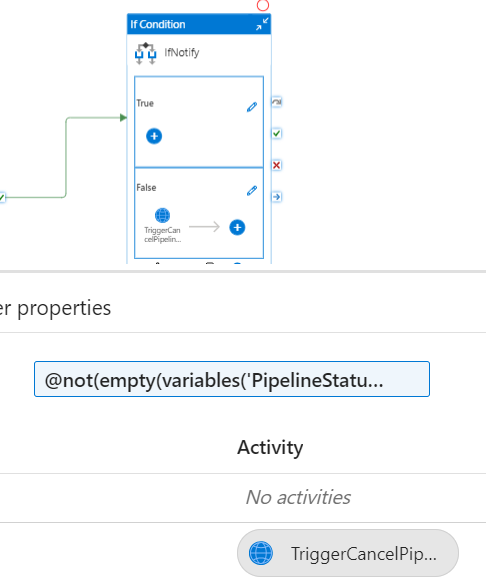

@variables('TempExecutionTime')

3. The If Condition activity checks whether the Until activity exited because the normal pipeline flow completed (success or failure), in which case no notification or cancellation is needed, or because of the timeout.

Expression :

@not(empty(variables('PipelineStatus')))

This validates whether the PipelineStatus variable is empty or not.

If it is empty, the normal flow of the pipeline is still in progress and has not yet reached the Set Variable activity, which means the pipeline has exceeded its allotted timeout.
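To see how the Until loop, its inner Set Variable steps and the If Condition fit together, the Python sketch below simulates them on a fake clock. It is an illustration, not ADF code: `simulate_timeout_monitor` and its arguments are hypothetical, the main flow is modelled as simply finishing after `main_flow_duration` seconds, and the overhead of the activities is ignored. It treats Until as a do-until loop (body first, then the exit check).

```python
from typing import Optional, Tuple


def simulate_timeout_monitor(
    timeout_in_sec: int,
    queue_time_in_sec: int,
    main_flow_duration: Optional[int],
    main_flow_result: str = "Success",
) -> Tuple[str, int]:
    """Return (decision, ExecutionTime) for one simulated pipeline run.

    decision is the PipelineStatus value if the main flow finished in time,
    or "Cancel" if the timeout branch of the If Condition would fire.
    main_flow_duration=None models a main flow that never finishes.
    """
    if queue_time_in_sec <= 0:
        raise ValueError("QueueTimeInSec must be positive")

    clock = 0             # simulated seconds since the run started
    execution_time = 0    # variables('ExecutionTime')
    pipeline_status = ""  # variables('PipelineStatus'), empty until the main flow ends

    while True:  # Until: run the body first, then evaluate the exit expression
        clock += queue_time_in_sec  # Wait3
        # The parallel main flow sets PipelineStatus once it has finished.
        if main_flow_duration is not None and clock >= main_flow_duration:
            pipeline_status = main_flow_result
        temp_execution_time = execution_time + queue_time_in_sec  # Set TempExecutionTime
        execution_time = temp_execution_time                      # Set ExecutionTime
        if pipeline_status or execution_time >= timeout_in_sec:   # Until expression
            break

    # If Condition: not(empty(variables('PipelineStatus')))
    if pipeline_status:
        return pipeline_status, execution_time  # normal completion: nothing to do
    return "Cancel", execution_time              # timeout: call the cancel REST API
```

With a 3600-second timeout and a 300-second interval, `simulate_timeout_monitor(3600, 300, 1000)` returns `("Success", 1200)`: the main flow finished at 1000 seconds and the loop noticed it at the next check. `simulate_timeout_monitor(1000, 300, 5000)` returns `("Cancel", 1200)`, because the timeout is only detected at the next multiple of the interval.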

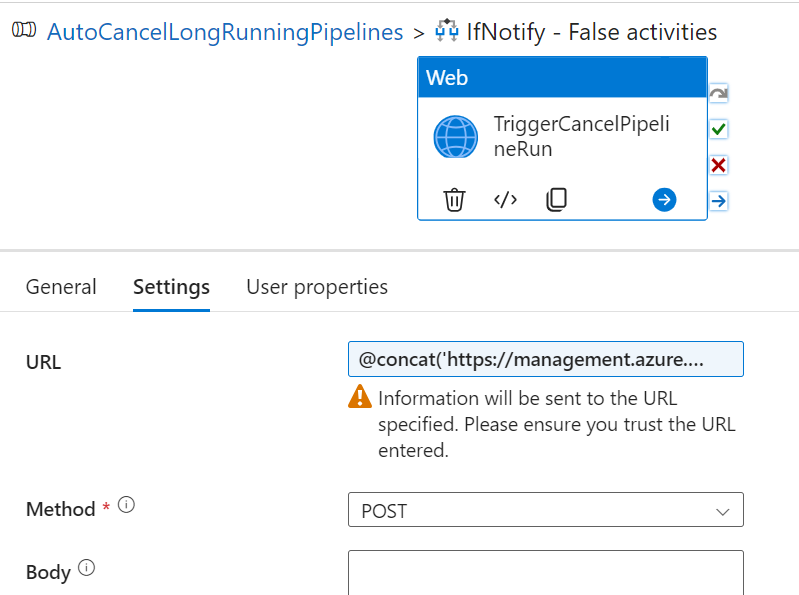

In our use case, to cancel the pipeline run, we use a Web activity that calls the pipeline run Cancel REST API.

The setup and details for cancelling the pipeline run are described in the article below :

Cancel Azure Data Factory Pipeline Runs via Synapse / Data Factory
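For reference, the Data Factory REST API cancels a run with a POST to the `pipelineruns/{runId}/cancel` endpoint under the factory's Azure Resource Manager path, which is where the SubscriptionID and ResourceGroupName parameters come in. The Python sketch below builds that URL and sends the POST with a bearer token; the helper names, sample values and token handling are assumptions for illustration. Inside the pipeline, the Web activity would typically take the factory name and run ID from the `pipeline().DataFactory` and `pipeline().RunId` system variables and authenticate with the factory's managed identity. Synapse workspaces expose a different, workspace-level endpoint that this sketch does not cover.

```python
import urllib.request
from urllib.parse import quote

API_VERSION = "2018-06-01"  # Data Factory REST API version


def cancel_run_url(subscription_id: str, resource_group_name: str,
                   factory_name: str, run_id: str) -> str:
    """Build the ARM URL for cancelling one Data Factory pipeline run."""
    q = lambda part: quote(part, safe="")  # escape each path segment
    return (
        "https://management.azure.com"
        f"/subscriptions/{q(subscription_id)}"
        f"/resourceGroups/{q(resource_group_name)}"
        "/providers/Microsoft.DataFactory"
        f"/factories/{q(factory_name)}"
        f"/pipelineruns/{q(run_id)}/cancel"
        f"?api-version={API_VERSION}"
    )


def cancel_run(url: str, bearer_token: str) -> int:
    """POST an empty body to the cancel URL; returns the HTTP status code.

    Assumes bearer_token is an Azure AD token for https://management.azure.com/.
    """
    request = urllib.request.Request(
        url,
        data=b"",
        method="POST",
        headers={"Authorization": f"Bearer {bearer_token}"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

Only `cancel_run` touches the network; `cancel_run_url("sub-id", "rg", "adf", "run-1")` can be checked offline and yields `https://management.azure.com/subscriptions/sub-id/resourceGroups/rg/providers/Microsoft.DataFactory/factories/adf/pipelineruns/run-1/cancel?api-version=2018-06-01`.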

To send a notification on timeout instead, one can use a Logic App together with a Web activity (by replacing the pipeline cancellation Web activity with a Web activity that triggers the Logic App).

Output :

Scenario 1)

Successful completion within the allotted time (disable the Fail1 activity in the example and update the expression in the ‘Set Pipeline Status’ activity accordingly)

Scenario 2)

Failure within the allotted time

Scenario 3)

Pipeline execution exceeding the timeout