Problem Statement :

Azure Blob Storage does not provide an API to get storage statistics at the folder level. Each file (blob) has a size attribute that gives the size of that file. The size of a directory / folder therefore has to be computed by summing up the sizes of all the files within it, including those in sub-folders.

The Get Metadata activity returns the list of files and folders present directly within a folder. It does not return the recursive folder structure (i.e. the list of all files present under the root / base folder, including those within its sub-folders).

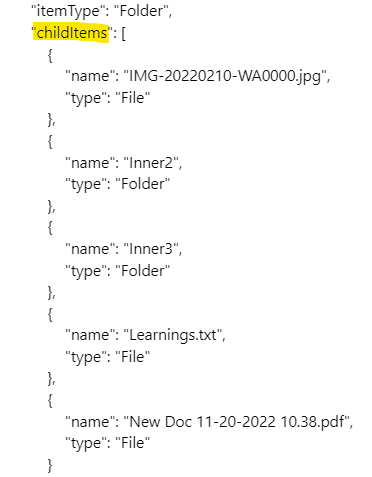

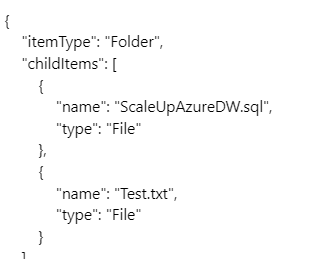

Child Items :

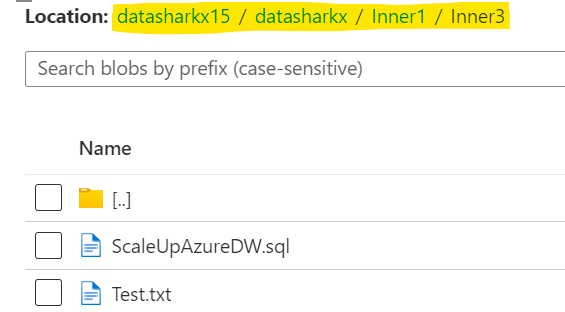

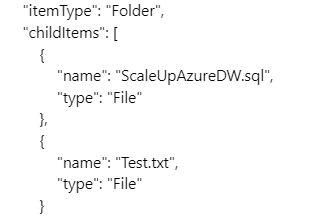

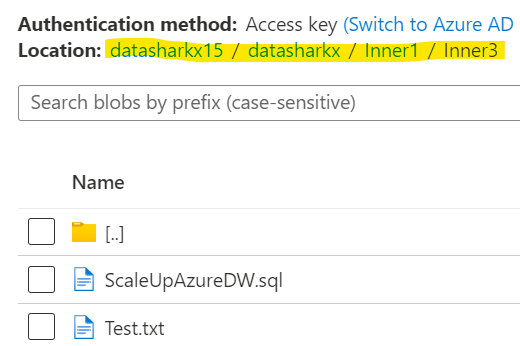

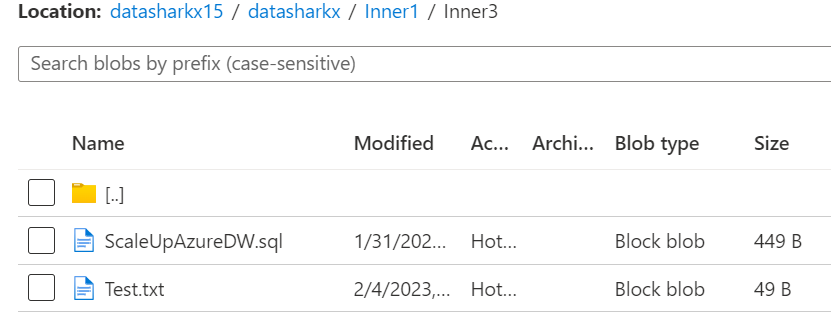

Inner3 Folder iteration :

So how can we get the list of files recursively, along with their sizes, via a Synapse / Data Factory pipeline?

Prerequisites :

- Azure Data Factory / Synapse

- Azure Blob Storage / Data Lake Storage

Solution :

Limitations within Synapse / Data Factory :

a) One cannot nest a ForEach / Until activity within another ForEach / Until activity

b) A pipeline cannot call itself via the Execute Pipeline activity

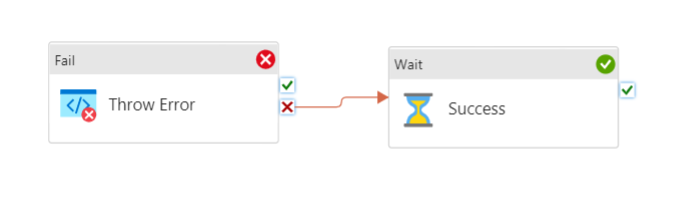

c) One cannot return values from an Execute Pipeline activity in a normal, successful execution (though one can achieve this by throwing an error and reading the error message)

For more details, refer to the end section of the blog below :

Error Logging and the Art of Avoiding Redundant Activities in Azure Data Factory

1. The idea is to start with the given path and loop until we have recursively scanned all the items within the base path.

As we do not know the list of files under the initial path during the initial execution, we leverage the Until activity to iterate over an array that we keep updating throughout the activity's life cycle (unlike the ForEach activity, which iterates over the array mapped at the start of its execution).
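To make the Until-versus-ForEach difference concrete, here is a minimal Python model of the same worklist idea. It is a sketch, not the pipeline itself: the in-memory TREE, its file names and sizes are made up, and `lookup` / `folder_size` are hypothetical helpers. Like the Until activity, the `while` loop re-reads the list on every pass, so child paths added during the loop are still processed; a ForEach would only see the items present when it started.

```python
from typing import Dict, List, Tuple, Union

# Hypothetical folder tree for illustration: a folder is a dict of
# child name -> subtree, and a file is its size in bytes.
Node = Union[int, Dict[str, "Node"]]

TREE: Dict[str, Node] = {
    "base": {
        "a.txt": 100,
        "Inner1": {"b.txt": 200, "Inner2": {"c.txt": 300}},
    }
}


def lookup(tree: Dict[str, Node], path: str) -> Node:
    """Resolve a '/'-separated path to a file size or a folder dict."""
    node: Node = tree
    for part in path.split("/"):
        node = node[part]  # type: ignore[index]
    return node


def folder_size(tree: Dict[str, Node], initial_path: str) -> Tuple[int, List[str]]:
    """Model of the Until loop: the worklist is re-read on every pass."""
    initial_array = initial_path.split(";")   # InitialArray
    final_size = 0                            # FinalSize
    files: List[str] = []
    while initial_array:                      # Until: stop once empty
        current = initial_array[-1]           # CurrentIterationPath
        initial_array = initial_array[:-1]    # drop the processed path
        node = lookup(tree, current)
        if isinstance(node, int):             # resource type is 'file'
            final_size += node
            files.append(current)
        else:                                 # folder: queue full child paths
            children = [f"{current}/{name}" for name in node]
            initial_array = children + initial_array  # children first, as with @union
    return final_size, files


size, files = folder_size(TREE, "base")
print(size, sorted(files))
```

For the sample tree the total is 600 bytes (100 + 200 + 300). Taking the last element and putting new children at the front means folders are drained in roughly breadth-first order; the order does not affect the total.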

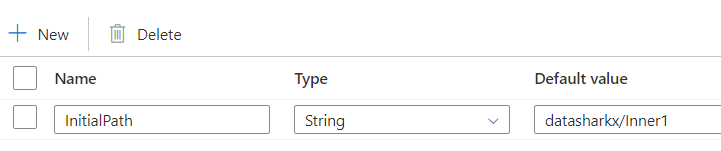

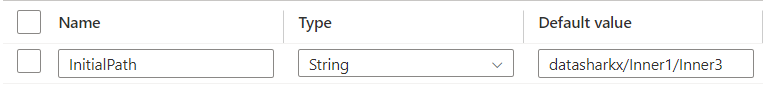

2. The pipeline uses a parameter, InitialPath, through which we pass the directory / folder whose size we want to calculate :

Variables :

Overall Pipeline Flow :

GitHub Code

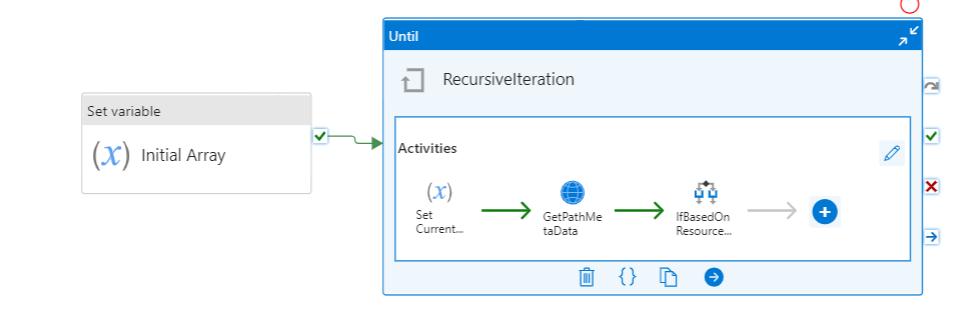

3. Initialize the Array variable InitialArray (which drives the Until activity iteration) with the InitialPath parameter value for the 1st iteration.

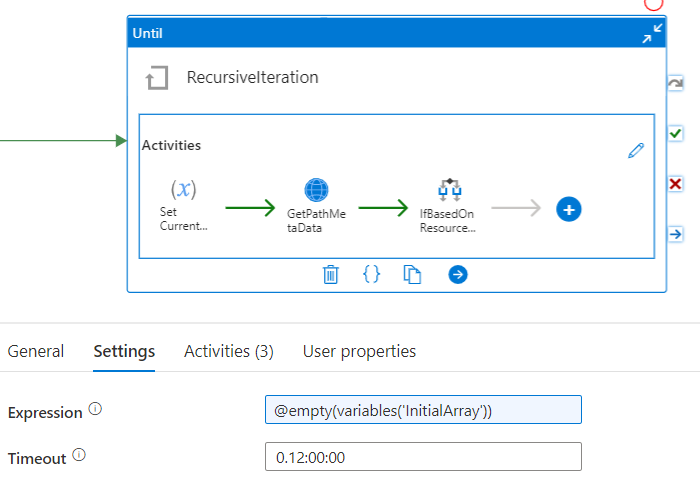

@split(pipeline().parameters.InitialPath,';')

Splitting on ';' also allows several paths to be passed in one run, separated by ';'.

4. Iterate until the InitialArray variable is empty

Within Until Activity

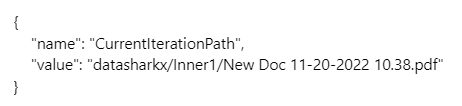

5. Set the CurrentIterationPath variable to the last element of InitialArray; we use it to build the full path for further iterations :

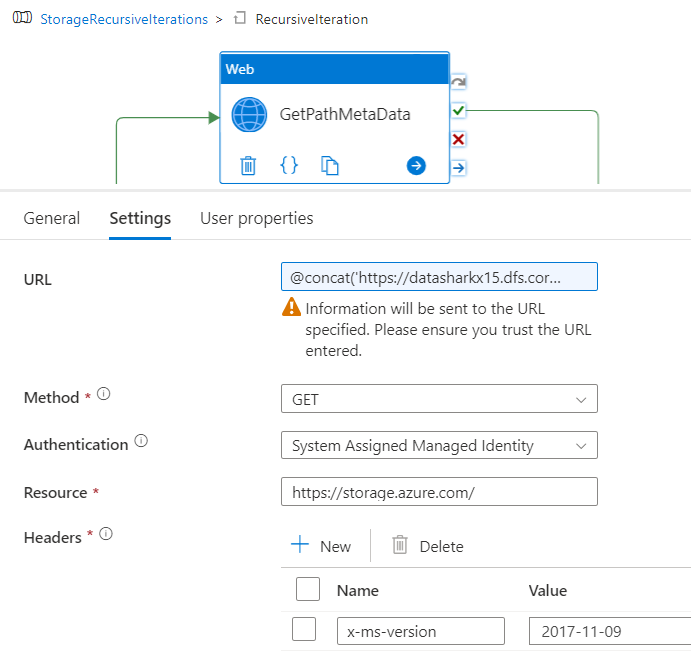

6. Use a Web activity to get the resource type of the current iteration path, i.e. whether it is a folder or a file.

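URL : @concat('https://<<BlobName>>.dfs.core.windows.net/<<Containername>>/',variables('CurrentIterationPath'))

Here <<BlobName>> stands for the storage account name and <<Containername>> for the container (file system) name. Refer to the blog below for the access aspects and for getting the blob metadata via a Web activity :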

Overcoming Limitations of Get Metadata Activity in Azure Synapse / Data Factory
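A minimal Python sketch of what this Web activity call and the If Condition check in the next step amount to. It assumes a hierarchical-namespace (Data Lake Storage Gen2) account, where a HEAD request on a path (the Path - Get Properties operation) returns an `x-ms-resource-type` header of `file` or `directory`. The function names, the bearer-token handling and the `x-ms-version` value are assumptions for illustration, and obtaining the token is not shown.

```python
import urllib.parse
import urllib.request
from typing import Dict, Mapping


def dfs_path_url(account: str, container: str, path: str) -> str:
    """Build the dfs endpoint URL, like the @concat(...) URL expression."""
    # quote() escapes spaces etc.; the ADF expression above does no escaping.
    return (f"https://{account}.dfs.core.windows.net/"
            f"{container}/{urllib.parse.quote(path, safe='/')}")


def get_path_headers(url: str, bearer_token: str) -> Dict[str, str]:
    """Hypothetical helper: send a HEAD request and return the response headers."""
    request = urllib.request.Request(
        url,
        method="HEAD",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "x-ms-version": "2021-08-06",  # assumed service version
        },
    )
    with urllib.request.urlopen(request) as response:
        return dict(response.headers.items())


def is_file(headers: Mapping[str, str]) -> bool:
    """Equivalent of the If Condition: x-ms-resource-type equals 'file'."""
    # HTTP header names are case-insensitive, so normalise them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    return lowered.get("x-ms-resource-type") == "file"
```

Anything other than `file` (i.e. `directory`) falls through to the folder branch, which mirrors the single `@equals(..., 'file')` test used by the pipeline.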

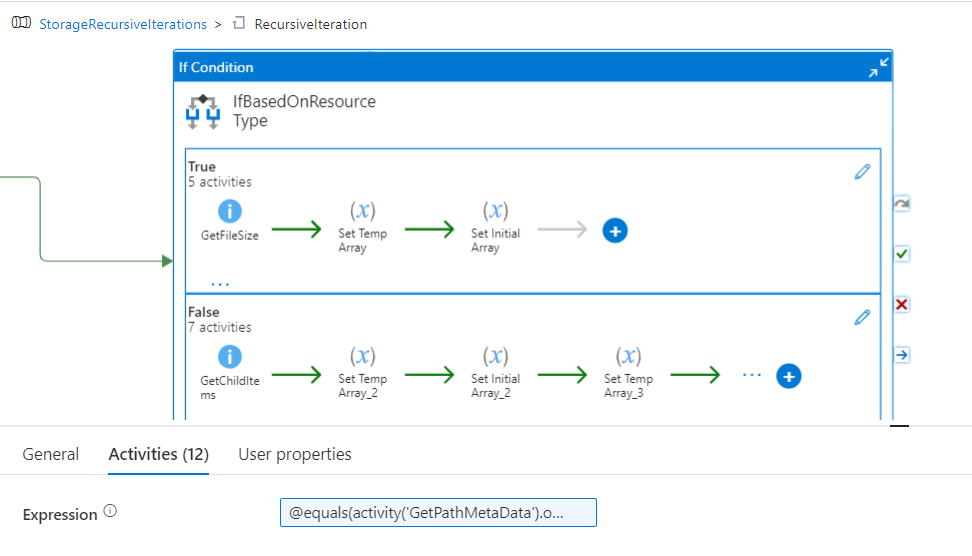

7. Use an If Condition activity to branch on whether the path maps to a file or a folder / directory, and take actions accordingly.

Expression : @equals(activity('GetPathMetaData').output.ADFWebActivityResponseHeaders['x-ms-resource-type'], 'file')

I) If Path type is File :

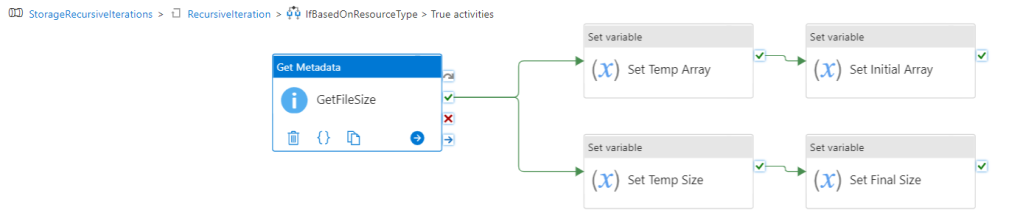

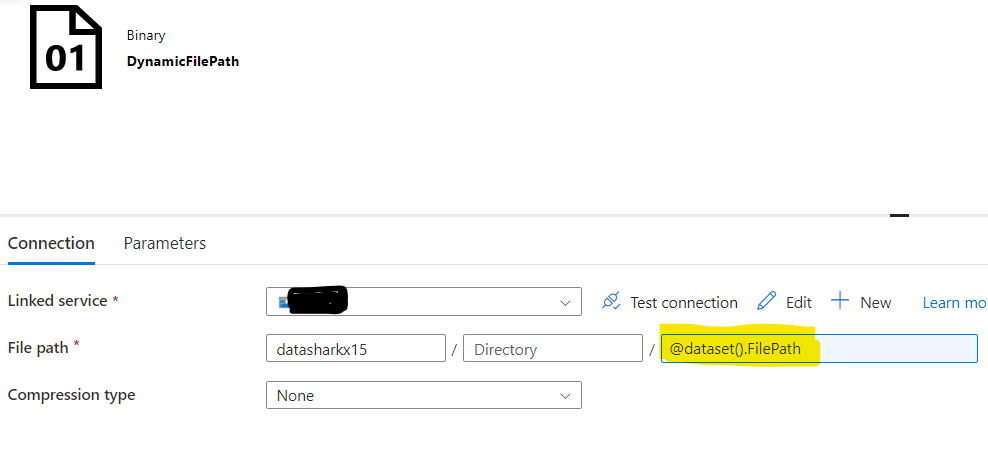

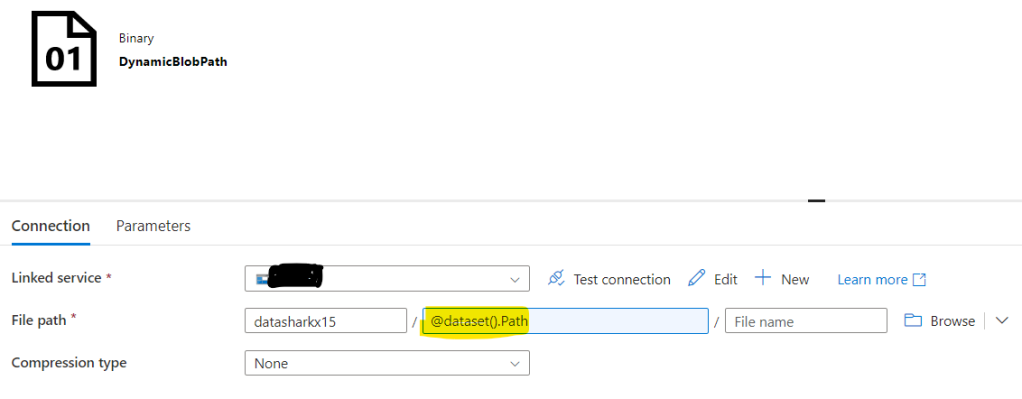

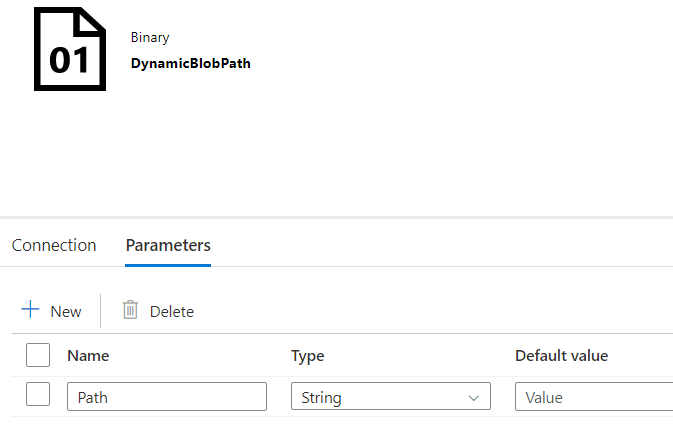

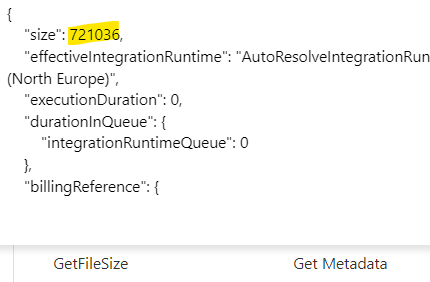

a) Get File Size via Get Metadata Activity

Dataset details :

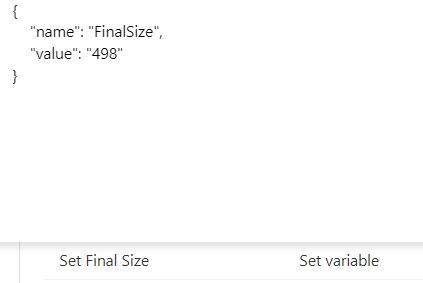

b) The flow below sums up the file sizes to calculate the overall folder size

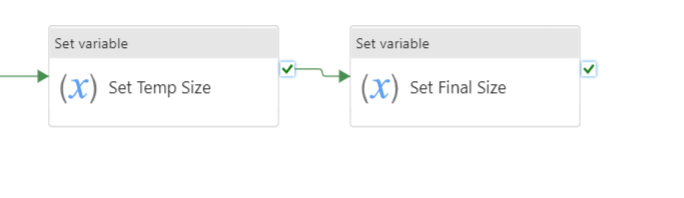

Set TempSize variable :

@string(add(int(variables('FinalSize')),activity('GetFileSize').output.size))

Note : ADF has a limitation wherein a Set Variable activity cannot reference the variable it is assigning. Hence the sum is first stored in TempSize.

Reassign the FinalSize variable with the TempSize variable value.
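The two-step update can be modeled in Python as below. This is a sketch: the `int()` / `string()` wrapping in the expression suggests FinalSize is a String variable, and starting it at "0" is an assumption. The function names are hypothetical; the sizes 449 and 49 are the file sizes from the results of run B.

```python
from typing import Iterable


def add_file_size(final_size: str, file_size: int) -> str:
    """One file iteration: @string(add(int(variables('FinalSize')), size))."""
    temp_size = str(int(final_size) + file_size)  # Set TempSize
    return temp_size                              # then FinalSize := TempSize


def total_size(file_sizes: Iterable[int], initial: str = "0") -> str:
    """Apply the two-step update once per file, as the Until loop does."""
    final_size = initial
    for size in file_sizes:
        final_size = add_file_size(final_size, size)
    return final_size


print(total_size([449, 49]))
```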

c) The flow below updates the InitialArray variable after every iteration

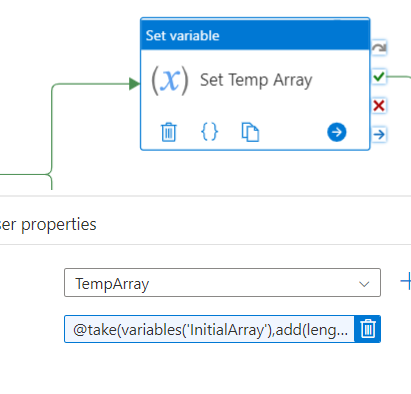

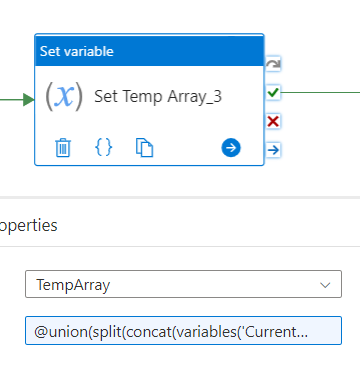

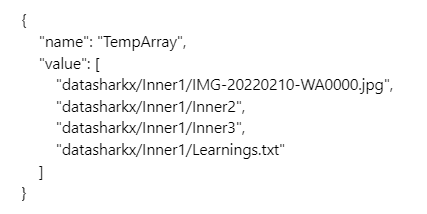

Set TempArray :

@take(variables('InitialArray'),add(length(variables('InitialArray')),-1))

This expression returns all elements of InitialArray except the last one (we remove the last element because the Until activity processes the array based on its last element).

Reassign the InitialArray variable with the TempArray variable value.
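In Python terms, `take(arr, length(arr) - 1)` is simply "everything but the last item". The sketch below pairs it with picking the last element as CurrentIterationPath; `adf_take` and `next_iteration` are hypothetical names used only for illustration.

```python
from typing import List, Tuple


def adf_take(items: List[str], count: int) -> List[str]:
    """take(): the first `count` items."""
    # max() stops Python's negative slicing from counting from the end;
    # the pipeline never hits that case because Until stops on an empty array.
    return items[: max(count, 0)]


def next_iteration(initial_array: List[str]) -> Tuple[str, List[str]]:
    """Return (CurrentIterationPath, TempArray) for one pass of the loop."""
    current = initial_array[-1]  # the loop works on the last element
    temp_array = adf_take(initial_array, len(initial_array) - 1)
    return current, temp_array


print(next_iteration(["base/a.txt", "base/Inner1"]))
```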

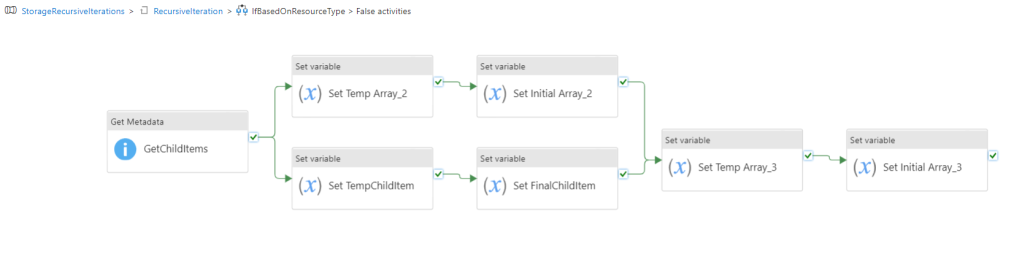

II) If Path type is Folder/Directory :

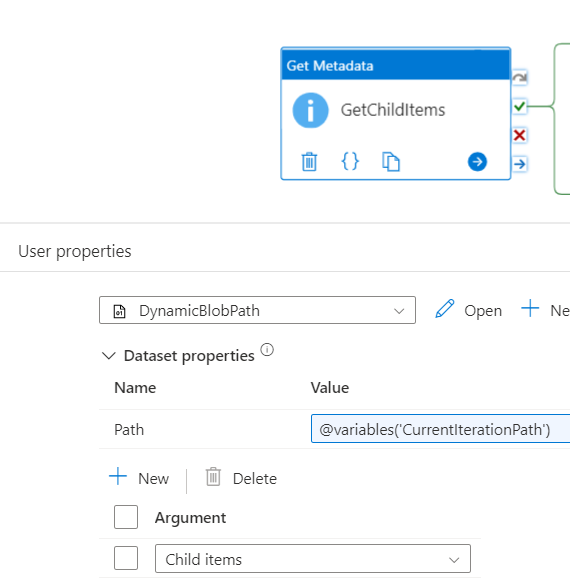

a) Get Child Items via Get Metadata Activity

Dataset details :

b) Flow 1 is equivalent to #c above: it updates the InitialArray by removing the path just processed.

c) Flow 2 builds the full path of each child item rather than just its file name or folder name

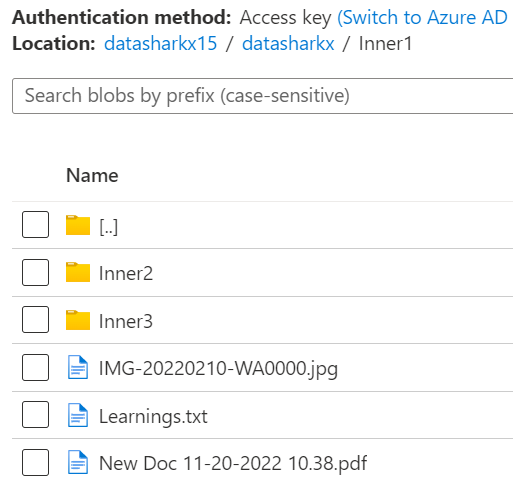

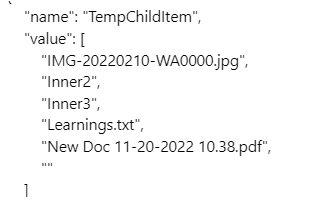

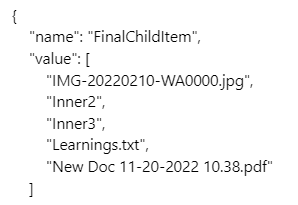

The childItems output of the Get Metadata activity is shown below :

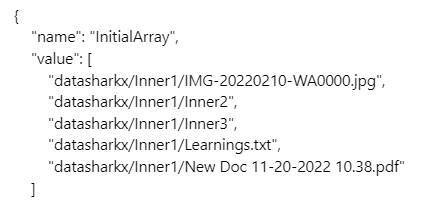

Actual Path :

For the dataset in the next iterations, we need to pass the full path.

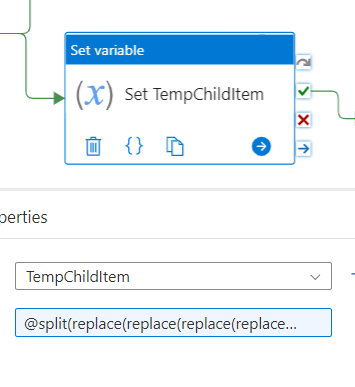

@split(replace(replace(replace(replace(replace(replace(replace(replace(replace(string(activity('GetChildItems').output.childItems),'[{',''),'}]',''),'{',''),'}',''),'"type":"Folder"',''),'"type":"File"',''),'"',''),'name:',''),',,',','),',')

Convert the childItems output of the Get Metadata activity to a string and replace all the unnecessary data (brackets, braces, double quotes, the type entries and the name: keys) with an empty string '' using dynamic content. Then split the resulting string with ',' as the delimiter to convert it back to an array.

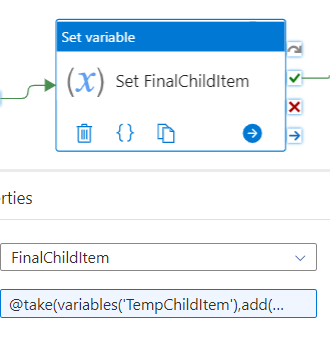

@take(variables('TempChildItem'),add(length(variables('TempChildItem')),-1))

Remove the last redundant element of the array: it is an empty string left over by the trailing ',' of the string above.
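The replace chain and the trailing-element removal can be replayed in Python to see why they work. This sketch assumes, as the expression itself does, that `string()` serialises childItems compactly with `name` before `type`; `json.dumps` with compact separators stands in for it. Both function names are hypothetical. One caveat shows up clearly here: a file or folder name containing `,`, `"`, `{`, `}` or `name:` would be mangled by the replace chain, while reading the names straight from the objects has no such issue.

```python
import json
from typing import Dict, List


def child_names_via_replace(child_items: List[Dict[str, str]]) -> List[str]:
    """Mirror the @split(replace(...)) and @take(...) expressions above."""
    # Assumption: string() serialises childItems compactly, name before type.
    text = json.dumps(child_items, separators=(",", ":"))
    for token in ['[{', '}]', '{', '}', '"type":"Folder"', '"type":"File"', '"', 'name:']:
        text = text.replace(token, "")
    text = text.replace(",,", ",")
    temp_child_item = text.split(",")  # TempChildItem, ends with ''
    return temp_child_item[: len(temp_child_item) - 1]  # drop the trailing ''


def child_names_direct(child_items: List[Dict[str, str]]) -> List[str]:
    """The same result read straight from the parsed objects."""
    return [item["name"] for item in child_items]


items = [{"name": "a.txt", "type": "File"}, {"name": "Inner1", "type": "Folder"}]
print(child_names_via_replace(items))
```

For the two sample items, the intermediate string becomes `a.txt,Inner1,`, so the split yields a trailing empty element, which is exactly what the `take` removes.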

d) Update the InitialArray by adding the Child Items

@union(split(concat(variables('CurrentIterationPath'),'/',join(variables('FinalChildItem'),concat(';',variables('CurrentIterationPath'),'/'))), ';'),variables('InitialArray'))

Here we take the union of the child items array and the InitialArray, where each child item is prefixed with CurrentIterationPath and '/' to get the full path for the dataset.
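A Python replay of the join / split prefixing trick and the union, as a sketch with hypothetical function names. The order-preserving de-duplication in `adf_union` is modeled on the documented `union()` example, where `union(createArray(1, 2, 3), createArray(1, 2, 10, 101))` returns `[1, 2, 3, 10, 101]`. The sketch also shows a side effect worth checking in the pipeline: for an empty child list, the trick yields a single `'<path>/'` entry instead of no entries.

```python
from typing import List


def prefix_children(current_path: str, final_child_item: List[str]) -> List[str]:
    """split(concat(cur, '/', join(items, concat(';', cur, '/'))), ';')"""
    joined = current_path + "/" + (";" + current_path + "/").join(final_child_item)
    return joined.split(";")


def adf_union(first: List[str], second: List[str]) -> List[str]:
    """union(): items of both lists, first occurrence kept, no duplicates."""
    result: List[str] = []
    for item in first + second:
        if item not in result:
            result.append(item)
    return result


children = prefix_children("base/Inner1", ["b.txt", "Inner2"])
print(adf_union(children, ["base/a.txt"]))
```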

Please refer to the blog below for additional details on appending a static value to every element of an array

Finally reassign the InitialArray variable with the TempArray variable value.

OUTPUT :

A) Initial Path :

Folder Iteration :

Set Variable :

Set Variable :

Set Variable :

File Iteration :

Set Variable :

Get Metadata :

Set Variable :

B) Initial Path :

Results : (449 + 49) = 498 B

Performance :

For #A, to recurse 3 folders and 6 files, it took 4 min 48 sec

For #B, to recurse 1 folder and 2 files, it took 1 min 16 sec

Note :

- The above approach can be used for the following scenarios :

  - Get Metadata recursively

  - Get the size of a folder

  - Copy all the files within a folder (recursively, i.e. including the files within the sub-folders) into a single folder with a flat structure

- It is possible to traverse folders recursively in Synapse / Data Factory pipelines without nesting an iteration activity within another iteration activity.

- Based on the performance results above, this approach performs poorly (4 min 48 sec for 3 folders and 6 files). For better performance, one can write custom code to get the metadata recursively and perform the required tasks.
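As one possible shape for such custom code, here is a sketch using the Azure Storage Blob SDK for Python (`azure-storage-blob`), assuming a connection string is available. A flat `list_blobs` listing with a name prefix already returns the blobs in all nested sub-folders, so no explicit recursion is needed. The function names are hypothetical, and on hierarchical-namespace accounts directories may show up in the listing as zero-byte entries, which leave the total unchanged but do appear among the names.

```python
from typing import Iterable, List, Tuple


def summarize(blobs: Iterable[Tuple[str, int]]) -> Tuple[int, List[str]]:
    """Sum (name, size) pairs and collect the names."""
    total = 0
    names: List[str] = []
    for name, size in blobs:
        total += size
        names.append(name)
    return total, names


def folder_size_from_storage(conn_str: str, container: str, folder: str) -> Tuple[int, List[str]]:
    """List every blob under `folder` (sub-folders included) and sum the sizes."""
    # Imported here so summarize() works without the SDK installed:
    # pip install azure-storage-blob
    from azure.storage.blob import ContainerClient

    client = ContainerClient.from_connection_string(conn_str, container_name=container)
    prefix = folder.rstrip("/") + "/"
    # A flat listing with a prefix already includes blobs in nested folders.
    return summarize((blob.name, blob.size) for blob in client.list_blobs(name_starts_with=prefix))
```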