Problem Statement :

Is it possible to trigger an Azure DevOps build pipeline from Azure Data Factory (ADF) / Synapse, given that neither offers an out-of-the-box connector for Azure DevOps?

Prerequisites :

- Azure Data Factory / Synapse

- Personal Access Token (PAT)

Solution :

1. We will use the Azure DevOps REST API : Runs - Run Pipeline to trigger the build pipeline run.

GitHub Code

2. To call the REST API, create a Personal Access Token (PAT) for a user who has permission to trigger build pipelines in the Azure DevOps project.

a. Log in to “https://dev.azure.com/{organization}/” with an account that has the access needed to trigger a build pipeline.

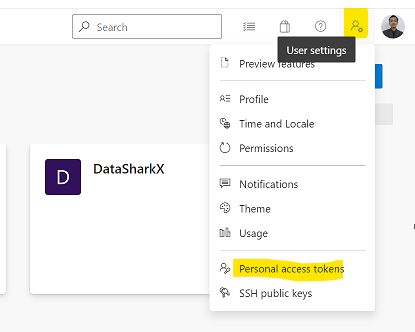

b. Hover over “User Settings” and select “Personal Access Tokens”.

c. Click “New Token”.

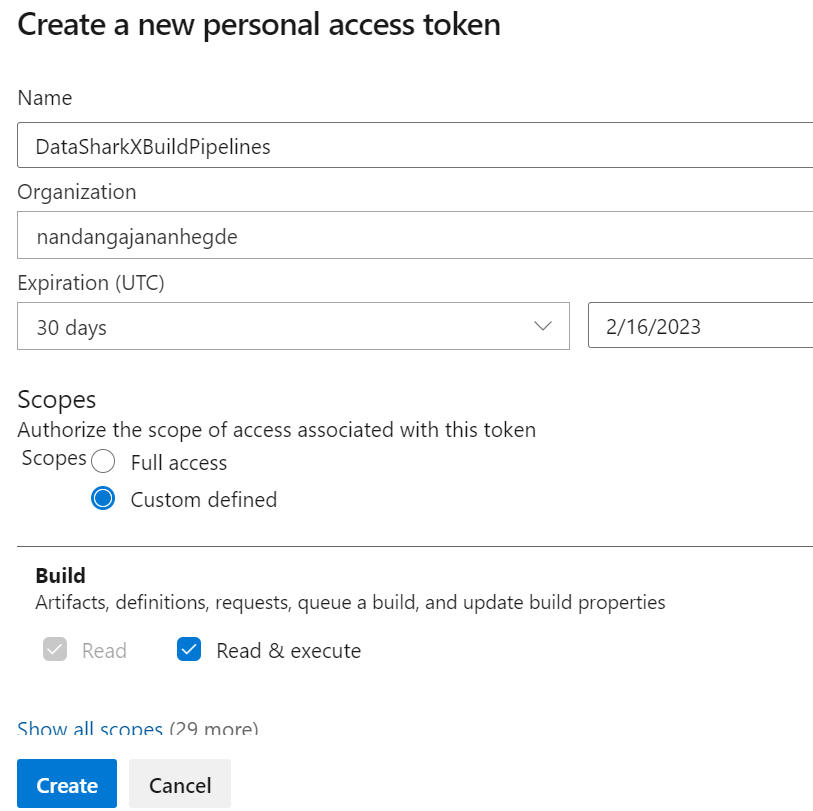

d. Generate the PAT with the minimum access privileges by selecting only the Build Read & Execute permissions.
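Outside ADF, a PAT like the one generated above is normally sent as the password half of an HTTP Basic credential. The snippet below is only a minimal sketch of that encoding: the helper name `basic_auth_header` and the placeholder PAT are assumptions for illustration, and the default username of a single space simply mirrors the article's remark that any non-empty username works.

```python
import base64


def basic_auth_header(pat: str, username: str = " ") -> dict:
    """Return an HTTP Basic Authorization header carrying the PAT as the password.

    `username` is arbitrary here (assumption taken from the article: any
    non-empty value, even a single space, is accepted).
    """
    raw = f"{username}:{pat}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # "my-placeholder-pat" is a dummy value, not a real token.
    print(basic_auth_header("my-placeholder-pat"))
```

In ADF itself none of this is needed, because the Web activity's Basic authentication setting performs the encoding; the sketch is only meant to make visible what ends up on the wire.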

3. Create 5 pipeline parameters in the ADF / Synapse pipeline.

The values they require are described below :

a) Organization: This represents the name of the Azure DevOps organization.

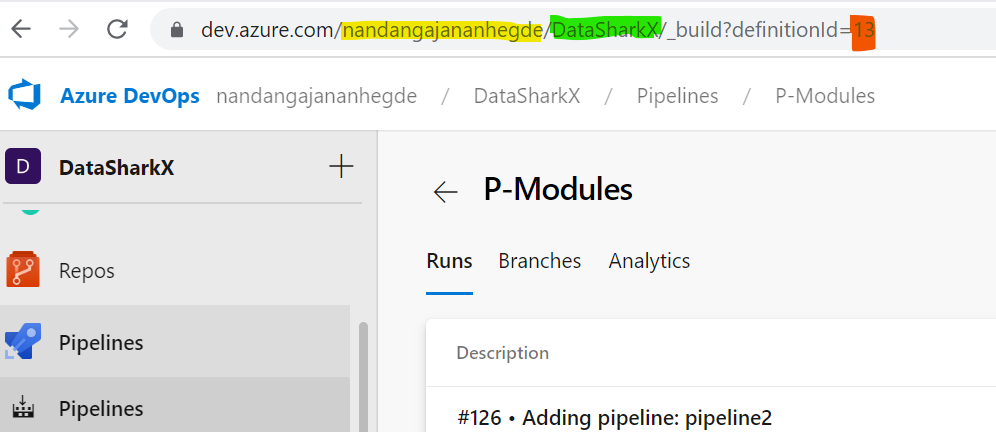

Log in to Azure DevOps and click on the build pipeline.

Sample URL format :

https://dev.azure.com/organization/project/_apis/pipelines/pipelineId/

The first path segment after dev.azure.com (highlighted in yellow) is the organization.

b) Project : The second path segment (highlighted in green) is the project.

c) PipelineId : The segment that follows pipelines/ (highlighted in red) is the PipelineId.

d) InputValue : A sample parameter that can be passed to the build pipeline at run time.

e) PATSecretName : The name of the Key Vault secret that holds the PAT.

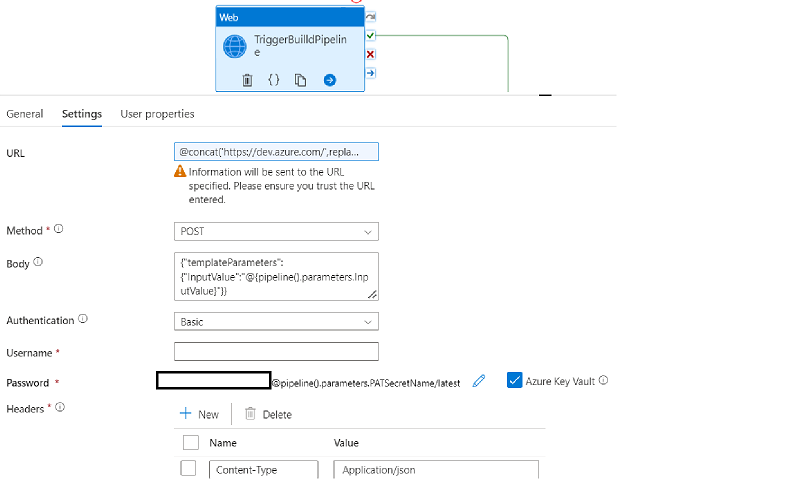
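To see how these parameters fit together, here is a rough Python sketch of the Run Pipeline request they describe. The endpoint shape, the `6.0-preview.1` API version and the `templateParameters` body are taken from the Web activity configuration in this article; the function names are hypothetical, and `urllib.parse.quote` is used as a stand-in for the `replace(..., ' ', '%20')` calls (it encodes other reserved characters as well, not only spaces).

```python
import json
from urllib.parse import quote


def run_pipeline_url(organization: str, project: str, pipeline_id,
                     api_version: str = "6.0-preview.1") -> str:
    """Build the Run Pipeline endpoint from the pipeline parameters."""
    org = quote(organization, safe="")   # a space becomes %20
    proj = quote(project, safe="")
    return (f"https://dev.azure.com/{org}/{proj}/_apis/pipelines/"
            f"{pipeline_id}/runs?api-version={api_version}")


def run_pipeline_body(input_value: str) -> str:
    """Build the JSON body that passes InputValue as a template parameter."""
    return json.dumps({"templateParameters": {"InputValue": input_value}})


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    print(run_pipeline_url("my org", "my project", 42))
    print(run_pipeline_body("DynamicScope"))
```

Keeping the URL and body builders separate makes it easy to swap in a different body for a pipeline that takes no parameters.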

4. Trigger Build Pipeline Config:

URL :

@concat('https://dev.azure.com/',replace(pipeline().parameters.organization,' ','%20'),'/',replace(pipeline().parameters.project,' ','%20'),'/_apis/pipelines/',pipeline().parameters.pipelineId,'/runs?api-version=6.0-preview.1')

Method : POST

Body :

{"templateParameters":{"InputValue":"@{pipeline().parameters.InputValue}"}}

Authentication : Basic

Username : Any value works, even a single space, but it must not be empty

Password :

Headers :

Content-Type : application/json

5. The trigger API call is asynchronous, so it does not tell you whether the build pipeline has actually succeeded. A successful Web activity only means that the DevOps pipeline run was triggered successfully. To check the status of the run, use another Web activity that fetches the run details via the REST API.

URL :

@concat('https://dev.azure.com/',replace(pipeline().parameters.organization,' ','%20'),'/',replace(pipeline().parameters.project,' ','%20'),'/_apis/pipelines/',pipeline().parameters.pipelineId,'/runs/',string(activity('TriggerBuilldPipeline').output.id),'?api-version=7.0')

Method : GET

Authentication : Basic

Username : Any value works, even a single space, but it must not be empty

Password :
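The status call above can be sketched in Python as follows. The URL shape and the `7.0` API version come from the expression in this step, and `state` and `result` are the response fields that the later expressions in this article read. The function names and the sample dictionary are hypothetical, and the sample is not a real API response.

```python
from urllib.parse import quote


def run_status_url(organization: str, project: str, pipeline_id, run_id,
                   api_version: str = "7.0") -> str:
    """Build the endpoint that returns the details of a single pipeline run."""
    org = quote(organization, safe="")
    proj = quote(project, safe="")
    return (f"https://dev.azure.com/{org}/{proj}/_apis/pipelines/"
            f"{pipeline_id}/runs/{run_id}?api-version={api_version}")


def summarize_run(run: dict) -> tuple:
    """Return (state, result); result is None while the run is still going."""
    return run.get("state"), run.get("result")


if __name__ == "__main__":
    # Illustrative, hand-written stand-in for the Web activity output.
    sample = {"id": 101, "state": "inProgress"}
    print(run_status_url("my org", "my project", 42, sample["id"]))
    print(summarize_run(sample))
```

The run id passed in here plays the role of `activity('TriggerBuilldPipeline').output.id` in the ADF expression.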

6. Add a polling pattern to periodically check the status of the pipeline run until it is complete. Start with an Until activity. In the settings of the Until activity, set the expression so that the loop keeps executing until the state returned by the Web activity above equals completed.

Expression :

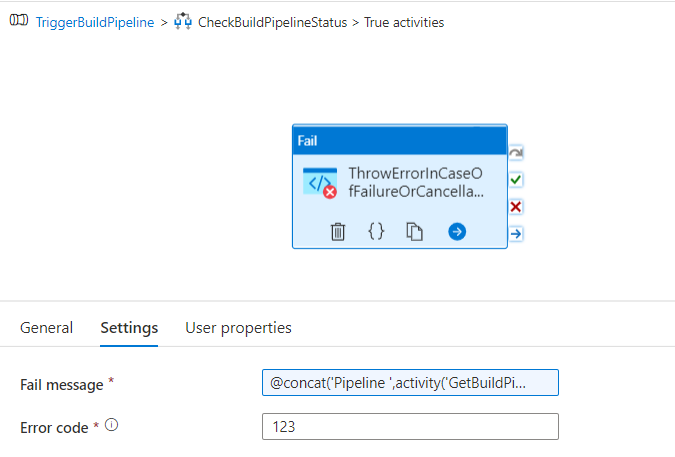

@equals(activity('GetBuildPipelineDetails').output.state,'completed')

7. The final activity is an If Condition activity that checks the result of the pipeline run and uses a Fail activity to fail the ADF pipeline if the DevOps pipeline run did not succeed.

Expression :

@not(equals(activity('GetBuildPipelineDetails').output.result,'succeeded'))

Fail Message :

@concat('Pipeline ',activity('GetBuildPipelineDetails').output.pipeline.name,' ',activity('GetBuildPipelineDetails').output.result)

Output :

Case 1 : YAML Pipeline (With Parameter)

Scenario 1: Success

Parameter value : DynamicScope

Web activity output 1st iteration :

Final Iteration :

Case 2 : Classic Pipeline

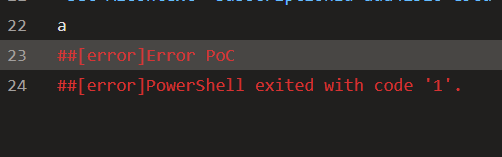

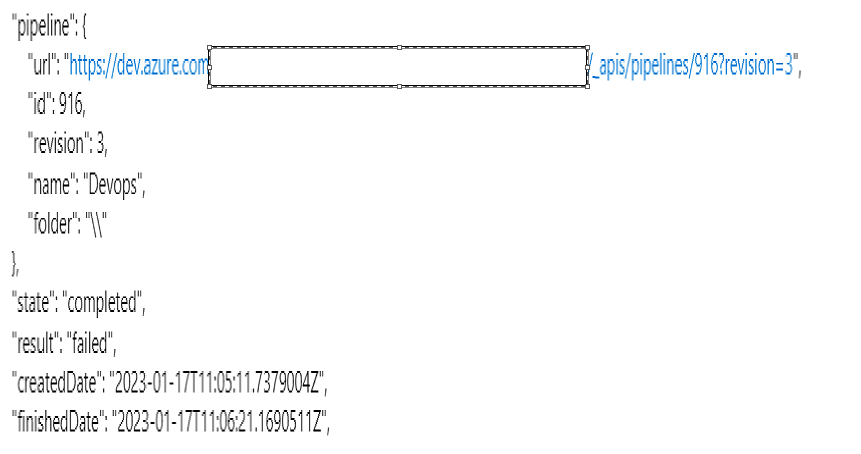

Scenario 1 : Failure

Web Activity Last Iteration :

Scenario 2 : Cancellation

Web Activity Final Iteration :

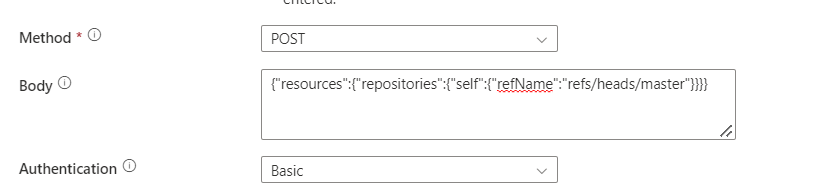

Note : For a non-parameterized build pipeline, one can also use the body below to trigger the build pipeline:

Body:

{"resources":{"repositories":{"self":{"refName":"refs/heads/master"}}}}
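As a recap of steps 6 and 7, the Until loop and the If / Fail check behave roughly like the Python sketch below. It is not the ADF implementation: `get_run` is a hypothetical callable standing in for the GetBuildPipelineDetails Web activity, and the poll interval and maximum number of polls are assumptions, since the article does not specify them.

```python
import time


def wait_for_run(get_run, poll_seconds=30, max_polls=120, sleep=time.sleep):
    """Call get_run() until the run's state is 'completed', then return it."""
    for _ in range(max_polls):
        run = get_run()
        if run.get("state") == "completed":
            return run
        sleep(poll_seconds)  # wait before the next status check
    raise TimeoutError("pipeline run did not complete within the polling budget")


def check_result(run: dict) -> dict:
    """Raise if the completed run did not succeed, mirroring the Fail activity."""
    result = run.get("result")
    if result != "succeeded":
        name = run.get("pipeline", {}).get("name", "")
        # Same wording as the Fail Message expression: 'Pipeline <name> <result>'
        raise RuntimeError(f"Pipeline {name} {result}")
    return run


if __name__ == "__main__":
    # Hand-written fake responses; a real get_run would call the REST API.
    fake = iter([
        {"state": "inProgress"},
        {"state": "completed", "result": "succeeded", "pipeline": {"name": "demo"}},
    ])
    run = wait_for_run(lambda: next(fake), sleep=lambda seconds: None)
    print(check_result(run)["result"])
```

Passing `sleep` in as a parameter keeps the loop easy to exercise without real waiting; a failed or cancelled run surfaces as an exception, much like the Fail activity stops the ADF pipeline.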