Problem Statement :

Is it possible to copy data from Power BI via Azure Data Factory (ADF) / Synapse as there is no out of the box connector for Power BI.

Prerequisites :

- Power BI Report / Dataset

- Azure Function ( In this blog, we would be leveraging PowerShell Core Runtime Stack )

- Azure SQL Database

Solution :

In my previous blogs Copy Data from Power BI through Azure Data Factory / Synapse Pipeline – Part 3 & Copy Data from Power BI through Azure Data Factory / Synapse Pipeline via Managed Identity Authentication– Part 4 , we saw how we can Web activity & Data Factory / Synapse Dataflow to get data from Power BI data set via REST API.

In this blog, we would be extracting the data via T-SQL from Azure SQL Database.

Note : We would be leveraging Azure SQL Database External REST Endpoints Integration via which we would be triggering an Azure function and Power BI REST API.

- In my previous blog Copy Data from Power BI through Azure Data Factory / Synapse Pipeline – Part 1 we extract the data from Power BI Dataset by generating an AAD Token of the Service Principal which has access on the needed Power BI Dataset.

- We would be following a similar route in the current flow ; wherein we would be triggering a Managed identity enabled Azure Function to generate a AAD Token which we would in turn use to extract the data from Power BI Dataset via API.

Note : The AAD Token can have an expiration time between 60 – 90 min. So rather than preserving that as a Database Scoped Credential, we can generate it at run time by triggering the Azure function (in this scenario)

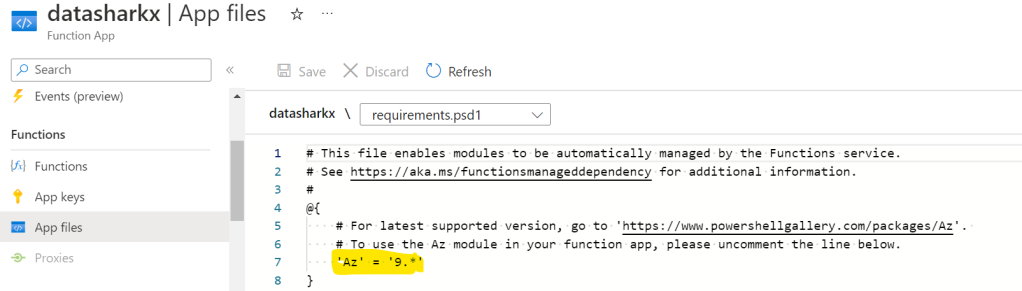

3. Assuming we have a PowerShell Core Runtime Stack Function App set up, update the requirements.psd1 ( present within App files section of App Function) to leverage Az modules.

4. Create a Function of HTTP Trigger type and Function Authorization Level

Enable Managed Identity for the Azure function, which we would need further to provide it access on the needed Power BI Dataset .

Add the below code (for AAD Token creation) in run.ps1 file within Code + Test Section and Save it.

using namespace System.Net

# Input bindings are passed in via param block.

param($Request, $TriggerMetadata)

Connect-AzAccount -Identity

$AADToken=(Get-AzAccessToken -resourceUrl "https://analysis.windows.net/powerbi/api")

# Associate values to output bindings by calling 'Push-OutputBinding'.

Push-OutputBinding -Name Response -Value ([HttpResponseContext]@{

StatusCode = [HttpStatusCode]::OK

Body = $AADToken

})

5. Follow Steps from #1 to #3 stated in Copy Data from Power BI through Azure Data Factory / Synapse Pipeline – Part 1 for providing the necessary access on the Power BI dataset.

6. Login to the Azure SQL database via which we need to execute the T-SQL for Power BI data extraction and execute the queries in the below provided sequence.

a)

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<<>>'

b)

CREATE DATABASE SCOPED CREDENTIAL [https://<<AzureFunctionName>>.azurewebsites.net/api/<<FunctionName>>]

WITH IDENTITY = 'HTTPEndpointHeaders', SECRET = '{"x-functions-key":"<<FunctionKey>>"}'

Where FunctionKey value is as below :

7. Create a Stored Procedure within the Azure SQL Database which we would trigger via ADF / Synapse.

CREATE PROCEDURE dbo.DataSharkXSP

AS

DECLARE @Appurl NVARCHAR(4000) = N'https://<<AzureFunctionName>>.azurewebsites.net/api/<<FunctionName>>';

DECLARE @Apppayload NVARCHAR(max) = N'{ }';

DECLARE @Token NVARCHAR(max);

DECLARE @Appret int, @Appresponse NVARCHAR(max);

DECLARE @PowerBIurl NVARCHAR(4000) = N'https://api.powerbi.com/v1.0/myorg/groups/<<WorkspaceID>>/datasets/<<DatasetID>>/executeQueries';

DECLARE @PowerBIpayload NVARCHAR(max) = N'{

"queries": [

{

"query": "' + string_escape('Evaluate SUMMARIZECOLUMNS( ''Date''[DateDimId], ''Date''[MonthNm], "CountRows",CALCULATE(COUNTROWS(''Date'')))

', 'json') + '"

}

],

"serializerSettings": {

"includeNulls": true

}

}'

DECLARE @PowerBIret int, @PowerBIresponse NVARCHAR(max);

--To Trigger the Azure Function in order to generate the AAD Token at run time

EXEC @Appret = sp_invoke_external_rest_endpoint

@url = @Appurl,

@method = 'POST',

@payload = @Apppayload,

@credential = [https://<<AzureFunctionName>>.azurewebsites.net/api/<<FunctionName>>],

@response = @Appresponse output;

SET @Token= (SELECT * FROM OPENJSON(@Appresponse, '$.result') WITH

(

"Token" NVARCHAR(max)

))

DECLARE @PowerBIheaders NVARCHAR(4000)=N'{"Authorization": "Bearer '+ @Token + '"}';

--To Trigger the Power BI DataSet refresh REST API

EXEC @PowerBIret = sys.sp_invoke_external_rest_endpoint

@method = 'POST',

@url = @PowerBIurl,

@payload = @PowerBIpayload,

@headers = @PowerBIheaders,

@response = @PowerBIresponse output;

SELECT * FROM OPENJSON(@PowerBIresponse, '$.result.results[0].tables[0].rows') WITH

(

"Date[DateDimId]" nvarchar(100),

"Date[MonthNm]" varchar(100),

"[CountRows]" int

)Output / Result of Final Query within the above Stored Procedure :

Query 1 :

SELECT * FROM OPENJSON(@PowerBIresponse, '$.result.results[0].tables[0].rows') WITH

(

"Date[DateDimId]" nvarchar(100),

"Date[MonthNm]" varchar(100),

"[CountRows]" int

)

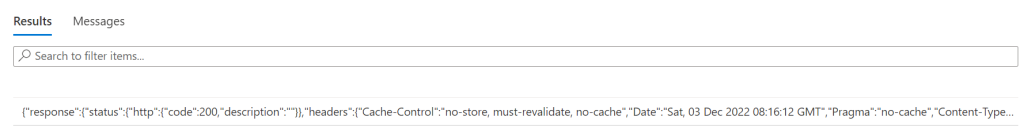

Query 2 :

SELECT * FROM OPENJSON(@PowerBIresponse, '$.result.results[0].tables[0].rows')

Query 3 :

SELECT @PowerBIresponse{“response”:{“status”:{“http”:{“code”:200,”description”:””}},”headers”:{“Cache-Control”:”no-store, must-revalidate, no-cache”,”Date”:”Sat, 03 Dec 2022 08:16:12 GMT”,”Pragma”:”no-cache”,”Content-Type”:”application\/json”,”strict-transport-security”:”max-age=31536000; includeSubDomains”,”x-frame-options”:”deny”,”x-content-type-options”:”nosniff”,”requestid”:”3e6b00d9-0fde-454a-a95c-1a26ad986aec”,”access-control-expose-headers”:”RequestId”,”request-redirected”:”true”,”home-cluster-uri”:”https:\/\/wabi-west-us-redirect.analysis.windows.net\/”}},”result”:{“informationProtectionLabel”:{“id”:”5fae8262-b78e-4366-8929-a5d6aac95320″,”name”:”Recipients Have Full Control”},”results”:[{“tables”:[{“rows”:[{“Date[DateDimId]”:20000101,”Date[MonthNm]”:”January”,”[CountRows]”:1},{“Date[DateDimId]”:20000102,”Date[MonthNm]”:”January”,”[CountRows]”:1}]}]}]}}

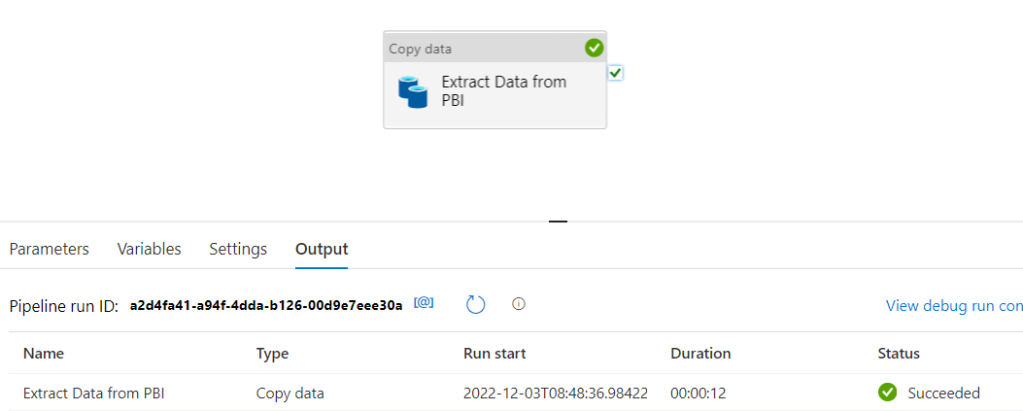

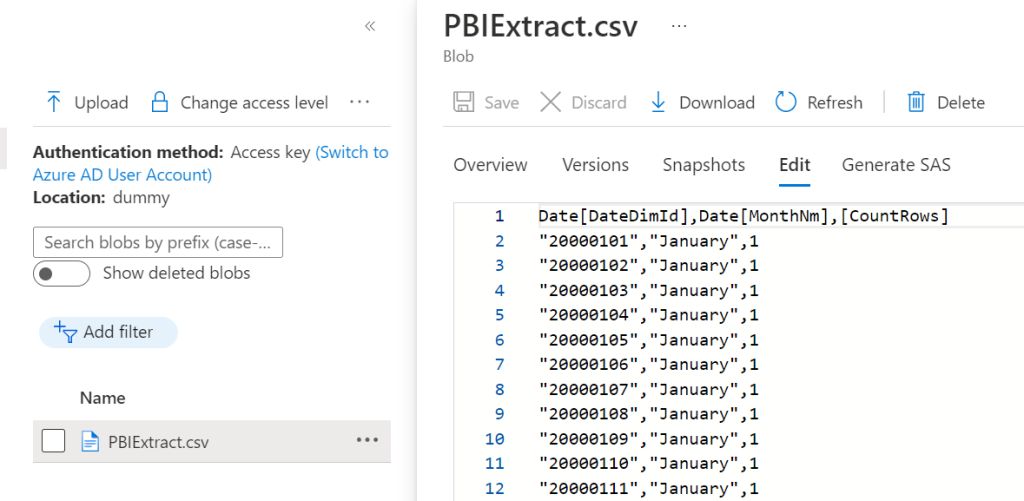

8. Create an ADF / Synapse Pipeline with Copy Activity with Source as the Azure SQL database and Sink (in this case) as Azure Blob storage.

Note : One can Parameterize the Query, Power BI URL within the Stored Procedure and pass it at run time via Azure Data Factory / Synapse Pipeline for a Generic logic.