Problem Statement :

Is it possible to refresh Power BI Dataflow / Dataset via Azure Data Factory (ADF) / Synapse as there is no out of the box connector for Power BI.

Prerequisites :

- Power BI Dataset / Dataflow

- Service Principal

Solution :

Note : Service Principal authentication can be leveraged in case of Cross tenant scenarios wherein the ADF / Synapse Pipelines are in tenant other than the Power BI tenant.

- Allow Service Principal to access Power BI APIs

- Go to the Power BI Service at https://app.powerbi.com and open the Admin Portal.

- Go to the ‘Tenant Settings’ section, and scroll to the setting for ‘Allow service principals to use Power BI APIs’. Enable the setting and Either allow the entire organization to use the Rest APIs (not recommended) or specify an Azure Active Directory Group and make sure your Service Principal is a member of that group

2. Grant the Service Principal, Member access on the workspace hosting the Power BI Dataset / Dataflow.

3. We would be leveraging the Power BI REST API : Datasets – Refresh Dataset and / or Dataflows – Refresh Dataflow for refreshing Power BI Dataset and Dataflow respectively and Datasets – Get Refresh History and / or Dataflows – Get Dataflow Transactions for getting refresh status of Power BI Dataset and Dataflow respectively.

GitHub Code

4. Create 8 pipeline parameters

For which below are the values required :

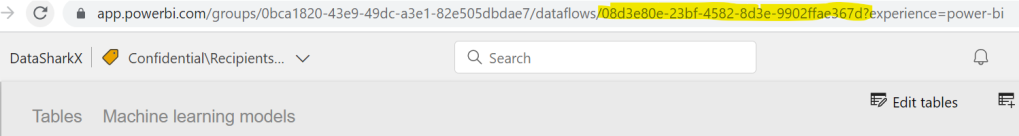

a) EntityId : This represents either the Power BI Dataset / Dataflow Id.

Login to the required workspace and click on the Dataset / Dataflow

Dataset:

Dataflow:

b) EntityType : This Parameter can either be Dataset or Dataflow based on the entity type we are planning to process.

c) WorkspaceId : This is the Id of the workspace hosting the dataset / dataflow.

d) EntityName : This is the dataset / dataflow name which is needed for Messaging purpose in case of failures.

e) TenantId : TenantId in which the Power BI Entity is published.

f) KeyVaultName : Name of the key vault in which the Client Secret of the Service Principal is preserved.

Get Client Secret value from Key Vault Config :

URL : @concat(‘https://’,pipeline().parameters.KeyVaultName,’.vault.azure.net/secrets/’,pipeline().parameters.ClientSecretKeyVaultSecretName,’?api-version=7.0′)

Get AAD Token Config :

URL : @concat(‘https://login.microsoftonline.com/’,pipeline().parameters.TenantId,’/oauth2/token’)

Body : @concat(‘grant_type=client_credentials&resource=https://analysis.windows.net/powerbi/api&client_id=’,pipeline().parameters.ClientId,’&client_secret=’,encodeUriComponent(activity(‘Get Client Secret from Key Vault’).output.value))

Trigger Power BI Refresh Config :

URL : @concat(‘https://api.powerbi.com/v1.0/myorg/groups/’,pipeline().parameters.WorkspaceId,if(equals(toUpper(pipeline().parameters.EntityType),’DATASET’),’/datasets/’,’/dataflows/’),pipeline().parameters.EntityId,’/refreshes’)

Authorization : @concat(string(activity(‘Get AAD Token’).output.token_type),’ ‘,string(activity(‘Get AAD Token’).output.access_token))

5. The Refresh API call is asynchronous. Hence, we do not know whether the dataset/dataflow refresh has actually succeeded. The successful execution of the web activity doesn’t mean that the refresh was a success. To check the status of the refresh one can leverage Web activity to trigger Power BI Dataset / Dataflow refresh status via Power BI REST API

URL : @concat(‘https://api.powerbi.com/v1.0/myorg/groups/’,pipeline().parameters.WorkspaceId,if(equals(toUpper(pipeline().parameters.EntityType),’DATASET’),’/datasets/’,’/dataflows/’),pipeline().parameters.EntityId,if(equals(toUpper(pipeline().parameters.EntityType),’DATASET’),’/refreshes?$top=1′,’/transactions?$top=1′))

Authorization : @concat(string(activity(‘Get AAD Token’).output.token_type),’ ‘,string(activity(‘Get AAD Token’).output.access_token))

We have to add a polling pattern to periodically check on the status of the refresh until it is complete. We start with an until activity. In the settings of the until loop, we set the expression so that the loop executes until the output of the above web activity is not equal to Unknown (in case of dataset) or InProgress (in case of dataflow). When a dataset is refreshing, “Unknown” is the status returned until it completes or fails and when a dataflow is refreshing, “InProgress” is the status returned until it completes or fails.

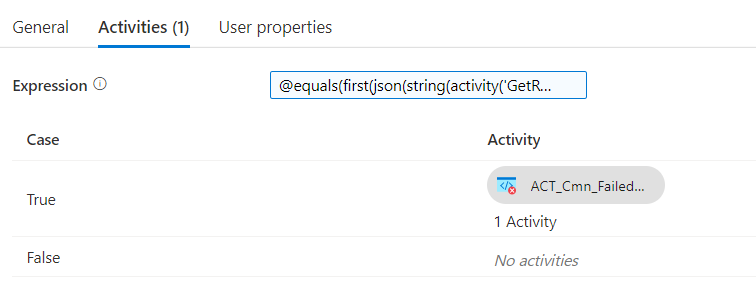

Expression : @not(equals(first(json(string(activity(‘GetRefreshDetails’).output)).value).status,if(equals(toUpper(pipeline().parameters.EntityType),’DATASET’),’Unknown’,’InProgress’)))

6. The Final activity is the IF activity that checks the Refresh status and leverages a Fail activity to fail the pipeline in case of refresh failure.

IF activity expression : @equals(first(json(string(activity(‘GetRefreshDetails’).output)).value).status,’Failed’)

Fail message : @if(equals(toUpper(pipeline().parameters.EntityType),’DATAFLOW’),concat(pipeline().parameters.EntityName,’ failed to refresh’),string(json(first(json(string(activity(‘GetRefreshDetails’).output))?.value)?.serviceExceptionJson)))